Large language models (LLMs) play a crucial role in a range of applications. However, their significant memory consumption, particularly that of the key-value (KV) cache, makes them challenging to deploy efficiently. Researchers from ShanghaiTech University and the Shanghai Engineering Research Center of Intelligent Vision and Imaging have proposed an efficient method that reduces the memory consumed by the KV cache of transformer decoders, thereby increasing throughput and reducing latency.

The KV cache, which stores the keys and values of previously processed tokens during generation, can account for over 30% of GPU memory consumption. Although methods exist to alleviate this burden, such as compressing KV sequences or applying dynamic cache eviction policies, these approaches often do not fully address memory fragmentation.
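To make the scale concrete, here is a back-of-the-envelope sketch of how KV cache size grows. The model shape (32 layers, 32 KV heads, head dimension 128, 16-bit values) and the workload (batch of 8, 2,048 tokens) are illustrative assumptions, not figures from the research, and `kv_cache_bytes` is a hypothetical helper.

```python
def kv_cache_bytes(cached_layers, kv_heads, head_dim, seq_len, batch_size,
                   bytes_per_value=2):
    """Estimate KV cache size in bytes.

    The leading factor of 2 covers the two cached tensors (keys and values).
    bytes_per_value=2 assumes 16-bit storage such as fp16.
    """
    return (2 * cached_layers * kv_heads * head_dim
            * seq_len * batch_size * bytes_per_value)


GIB = 1024 ** 3

# Hypothetical model shape and workload, chosen only for illustration.
shape = dict(kv_heads=32, head_dim=128, seq_len=2048, batch_size=8)

all_layers = kv_cache_bytes(cached_layers=32, **shape)
one_layer = kv_cache_bytes(cached_layers=1, **shape)

print(all_layers / GIB)  # 8.0
print(one_layer / GIB)   # 0.25
```

The estimate is linear in every factor, including the number of layers whose keys and values are stored, which is why reducing the number of cached layers shrinks the cache proportionally.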

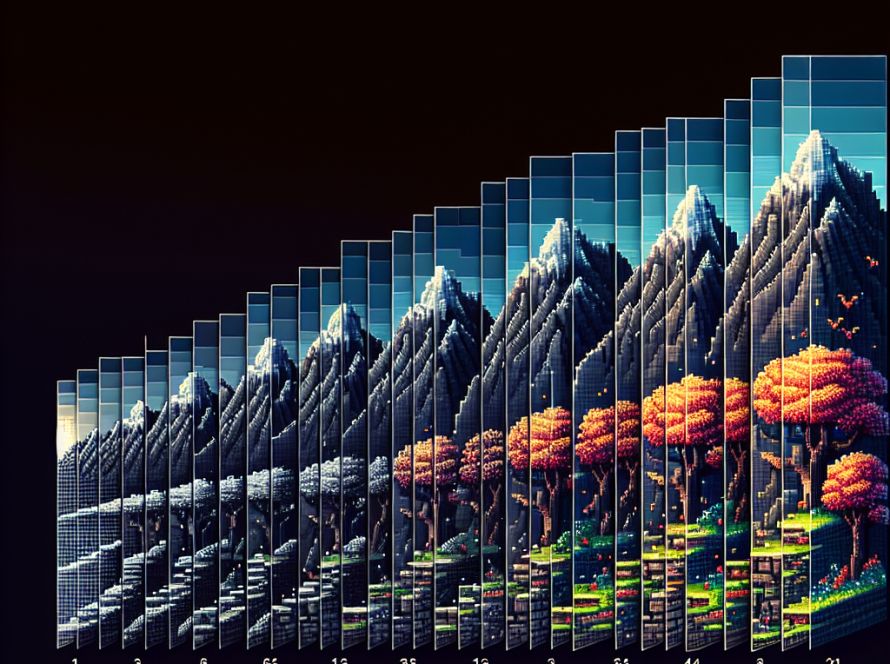

Existing work has already tackled parts of the problem: ‘paged attention’ mitigates memory fragmentation, and other strategies include incrementally compressing token spans, pruning non-essential tokens, and storing only crucial tokens, among others. The new approach instead pairs the queries of all layers with the keys and values of only the top layer. This drastically reduces memory requirements, since only one layer’s keys and values need to be cached, and it does so without additional computational overhead.

The model uses a structure similar to the cross-attention of standard transformers, attending only to the keys and values of the top layer. Because the queries of every layer are paired with the top layer’s KVs, the KVs of the other layers need not be computed or cached, which saves both memory and computation.
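The decoding loop implied by this design can be sketched as follows. This is a toy illustration under stated assumptions, not the researchers’ implementation: `ToyLayer`, `decode_step`, `attend` and `matvec` are hypothetical names, the layers have single-head attention with random weights, and feed-forward blocks and normalization are omitted. The only point is that every layer reads one shared cache and only the top layer writes to it.

```python
import math
import random


def matvec(matrix, vector):
    """Multiply a square matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]


def attend(query, keys, values):
    """Softmax attention of a single query over a list of keys and values."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    peak = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    return [
        sum(w * value[d] for w, value in zip(weights, values)) / total
        for d in range(len(values[0]))
    ]


class ToyLayer:
    """Hypothetical layer holding only query/key/value projections."""

    def __init__(self, rng, dim):
        def make():
            return [[rng.uniform(-0.5, 0.5) for _ in range(dim)]
                    for _ in range(dim)]
        self.w_q, self.w_k, self.w_v = make(), make(), make()


def decode_step(layers, hidden, top_kv_cache):
    """Process one token; every layer attends to the shared top-layer cache."""
    dim = len(hidden)
    if top_kv_cache:
        keys = [k for k, _ in top_kv_cache]
        values = [v for _, v in top_kv_cache]
    else:
        # First token: nothing cached yet, so use a zero-vector dummy KV.
        keys, values = [[0.0] * dim], [[0.0] * dim]
    new_kv = None
    for index, layer in enumerate(layers):
        if index == len(layers) - 1:
            # Only the top layer computes keys and values for this token.
            new_kv = (matvec(layer.w_k, hidden), matvec(layer.w_v, hidden))
        context = attend(matvec(layer.w_q, hidden), keys, values)
        hidden = [h + c for h, c in zip(hidden, context)]  # residual add
    top_kv_cache.append(new_kv)  # visible to later tokens only
    return hidden


rng = random.Random(0)
layers = [ToyLayer(rng, dim=4) for _ in range(6)]
cache = []
for step in range(5):
    output = decode_step(layers, [0.1 * (step + 1)] * 4, cache)

print(len(cache))       # 5 entries: one per token
print(len(layers) * 5)  # 30 entries a per-layer cache would hold
```

In this sketch the cache grows by one key-value pair per token regardless of depth, whereas a conventional per-layer cache would grow by one pair per token per layer.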

A token’s top-layer keys and values are not yet available while its lower layers are still being computed, so a token cannot attend to itself. The model therefore masks the diagonal of the attention matrix and uses zero vectors as dummy KVs for the first token, which has no preceding tokens to attend to. The model still retains standard attention in part, which helps preserve the syntactic-semantic patterns found in transformer models and keeps performance competitive.
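The masking rule can be illustrated with a small sketch. The helper names `attention_mask` and `rows_needing_dummy` are hypothetical, and applying the dummy KV only to rows with no visible position is an assumption about how the zero vectors are used.

```python
def attention_mask(num_tokens, mask_diagonal=True):
    """Return mask[i][j], True when token i may attend to token j."""
    return [
        [(j < i) if mask_diagonal else (j <= i) for j in range(num_tokens)]
        for i in range(num_tokens)
    ]


def rows_needing_dummy(mask):
    """Rows with no visible position; these fall back to a zero-vector dummy KV."""
    return [i for i, row in enumerate(mask) if not any(row)]


standard = attention_mask(4, mask_diagonal=False)  # usual causal mask
masked = attention_mask(4, mask_diagonal=True)     # diagonal removed

for row in masked:
    print("".join("x" if visible else "." for visible in row))
# ....
# x...
# xx..
# xxx.

print(rows_needing_dummy(standard))  # []
print(rows_needing_dummy(masked))    # [0]
```

With the diagonal masked, only the first row is left with nothing to attend to, which is why a dummy entry is needed for the first token alone.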

The method was evaluated on models with varying numbers of parameters and on different GPUs, and it improved latency, throughput, and feasible batch size compared with standard models. It also performed well on commonsense reasoning tasks and proved effective at processing token sequences of unbounded length. The method does require longer pre-training because of its iterative training process, but it offers substantial memory reduction and higher throughput with minimal performance loss.

The model is also compatible with other memory-saving techniques such as StreamingLLM. Overall, the research contributes to more efficient deployment of LLMs. The findings and the accompanying code are available in the project’s paper and GitHub repository, respectively.