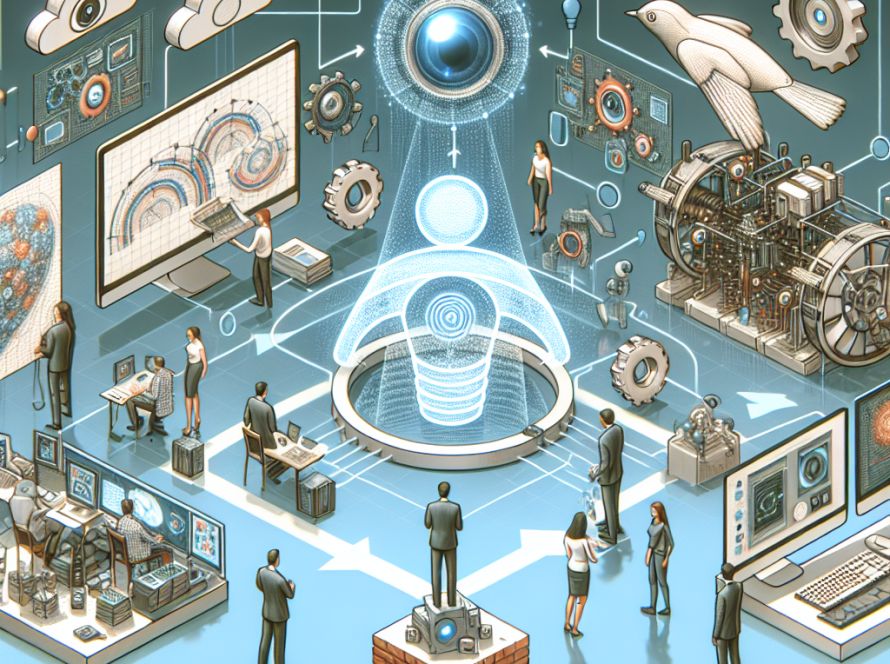

While artificial intelligence (AI) chatbots like ChatGPT are capable of a variety of tasks, concerns have been raised about their potential to generate unsafe or inappropriate responses. To mitigate these risks, AI labs use a safeguarding method called “red-teaming”. In this process, human testers write prompts designed to elicit undesirable responses from the AI, and those prompts are then used to teach the chatbot to avoid such responses.

However, the effectiveness of red-teaming depends on the testers’ ability to anticipate every toxic prompt a user could input, and any prompt they miss leaves a gap in the chatbot’s safeguards. To improve this process, researchers from MIT’s Improbable AI Lab and the MIT-IBM Watson AI Lab have developed a method to automate red-teaming. Their machine learning approach trains a large language model to automatically produce diverse prompts that elicit a wider range of undesirable responses from the chatbot being tested.

This is achieved by teaching the red-team model to be “curious”, encouraging it to write novel prompts that elicit toxic responses from the chatbot under review. Notably, the method produced more distinct prompts than other automated approaches and drew out toxic responses from a chatbot that had previously been deemed safe. The researchers suggest this could revolutionise the red-teaming process, making quality assurance faster and more effective.

The research team used reinforcement learning, a trial-and-error process that rewards the red-team model for generating prompts that elicit toxic responses from the chatbot. A technique named “curiosity-driven exploration” was applied, motivating the red-team model to vary its prompts in the words used, the sentence patterns, and the meaning. Prompts the model has already tried no longer count as novel, so repeating them earns it nothing extra and it is pushed toward new ones. As the model interacts with the chatbot, a safety classifier rates the toxicity of the chatbot’s response, and the model is rewarded based on this rating.
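
To make the idea of a novelty signal concrete, here is a minimal Python sketch. It is an illustration only, not the researchers' implementation: the function names (`word_set`, `jaccard`, `novelty_bonus`) are hypothetical, and word-overlap (Jaccard) similarity is an assumed stand-in for however the team actually measures differences in wording, sentence pattern, and meaning.

```python
def word_set(prompt: str) -> frozenset[str]:
    """Lower-case a prompt and reduce it to its set of words."""
    return frozenset(prompt.lower().split())


def jaccard(a: frozenset[str], b: frozenset[str]) -> float:
    """Word-overlap similarity: 1.0 for identical sets, 0.0 for disjoint ones."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def novelty_bonus(prompt: str, history: list[str]) -> float:
    """Toy curiosity signal (an assumption, not the paper's measure).

    Returns 1.0 for a prompt unlike anything tried before and 0.0 for a
    prompt that repeats the words of an earlier one.
    """
    if not history:
        return 1.0
    current = word_set(prompt)
    closest = max(jaccard(current, word_set(past)) for past in history)
    return 1.0 - closest
```

Under this sketch, a prompt that merely repeats an earlier one earns a bonus of zero, which is the behaviour the paragraph above describes: repetition stops paying off, so the model has a reason to try something different.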

To instil this curiosity, the researchers modified the reward signal in the reinforcement learning setup so that it rewards novel prompts as well as toxic responses. To prevent the model from generating meaningless text in pursuit of novelty, they also added a naturalistic language bonus to the training objective. With these additions in place, the red-team model produced more varied and more effective prompts. The team managed to draw out 196 toxic responses from a supposedly “safe” chatbot that had been fine-tuned with human feedback.
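
One way to picture how these reward terms could fit together is the Python sketch below. It is a simplified assumption rather than the team's actual objective: the names `RewardWeights` and `red_team_reward`, the weight values, and the convention that every score lies between 0 and 1 are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RewardWeights:
    """Illustrative weights; the real values are not given in the article."""
    novelty: float = 0.5
    naturalness: float = 0.5


def red_team_reward(
    toxicity: float,
    novelty: float,
    naturalness: float,
    weights: RewardWeights = RewardWeights(),
) -> float:
    """Combine the three signals described above into one scalar reward.

    toxicity:    safety classifier's rating of the chatbot's response
    novelty:     how different the prompt is from earlier prompts
    naturalness: how much the prompt reads like ordinary language
    All three are assumed to be scores in [0, 1].
    """
    for name, value in (
        ("toxicity", toxicity),
        ("novelty", novelty),
        ("naturalness", naturalness),
    ):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")
    return (
        toxicity
        + weights.novelty * novelty
        + weights.naturalness * naturalness
    )
```

In this sketch, two prompts that elicit equally toxic responses do not earn the same reward: the one that is new and reads naturally scores higher than a repeat or a string of gibberish, which is the trade-off the modified reward is meant to encode.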

The research team expects the number of AI model releases to keep growing and sees its work as critical to making those releases safe. Verifying models before they reach the public will require large-scale quality assurance methods like the one the team has developed. Future work will aim to broaden the range of subjects covered by the prompts the red-team model generates, and to explore using large language models as toxicity classifiers. The research could prove pivotal in ensuring that the rapidly growing field of AI remains safe and trustworthy.