Large Language Models (LLMs) have transformed how we interact with AI, notably through conversational chatbots. Their effectiveness depends heavily on the high-quality instruction data used during post-training. However, traditional post-training, which relies on human annotation and evaluation, is costly and limited by the availability of human annotators. This calls for a more automated and scalable approach to continuously improving LLMs.

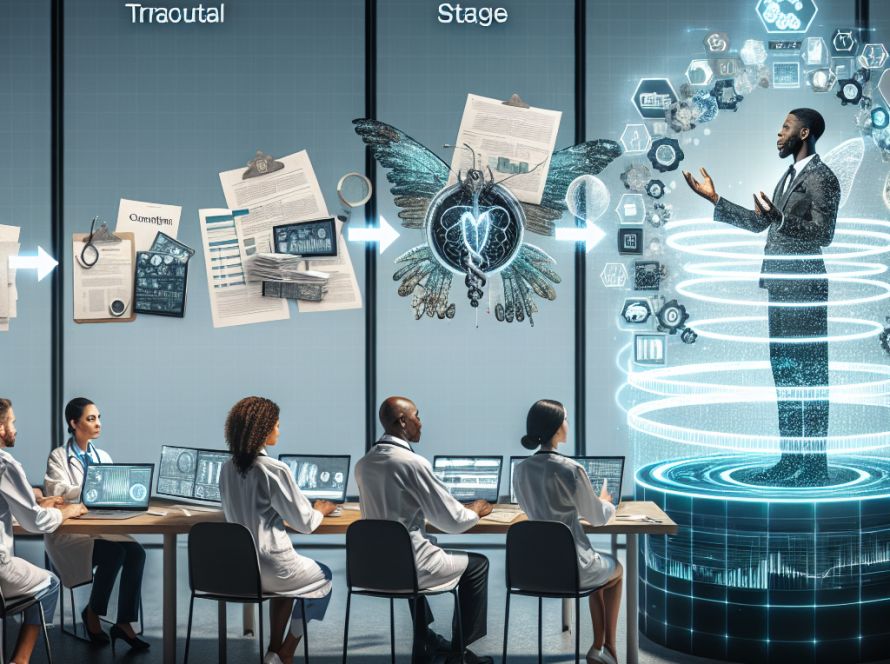

To address this, researchers from Microsoft Corporation, Tsinghua University, and SIAT-UCAS introduced Arena Learning, a method that replaces manual annotation and evaluation with AI. The technique simulates iterative battles among different language models over a large and diverse set of instructions. The resulting AI-annotated battle results are then used to iteratively fine-tune and improve the target model through supervised learning and reinforcement learning. In essence, the method forms a self-sustaining data loop, or "data flywheel", for efficient post-training of LLMs.
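The battle-and-retrain loop described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the authors' implementation: models and the judge are plain callables, and the names `run_battles`, `flywheel`, `BattleData`, and `toy_train` are hypothetical. It assumes the target learns from the battles it loses, with the rival's winning response used as a supervised fine-tuning target and the (winner, loser) pair kept as preference data for a reinforcement-learning-style stage.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Assumption: a "model" is any callable mapping an instruction to a response.
Model = Callable[[str], str]
# Assumption: a judge scores (instruction, response_a, response_b) -> (score_a, score_b).
Judge = Callable[[str, str, str], Tuple[float, float]]


@dataclass
class BattleData:
    sft: List[Tuple[str, str]] = field(default_factory=list)              # (instruction, better response)
    preference: List[Tuple[str, str, str]] = field(default_factory=list)  # (instruction, chosen, rejected)


def run_battles(target: Model, rivals: List[Model], judge: Judge, instructions: List[str]) -> BattleData:
    """Pit the target against each rival and harvest the battles it loses."""
    data = BattleData()
    for instr in instructions:
        target_resp = target(instr)
        for rival in rivals:
            rival_resp = rival(instr)
            target_score, rival_score = judge(instr, target_resp, rival_resp)
            if rival_score > target_score:
                # The rival's stronger answer becomes training signal for the target.
                data.sft.append((instr, rival_resp))
                data.preference.append((instr, rival_resp, target_resp))
    return data


def flywheel(target: Model, rivals: List[Model], judge: Judge,
             batches: List[List[str]], train: Callable[[Model, BattleData], Model]) -> Model:
    """One round per instruction batch: battle, collect data, retrain the target."""
    for batch in batches:
        target = train(target, run_battles(target, rivals, judge, batch))
    return target


def toy_train(model: Model, data: BattleData) -> Model:
    """Hypothetical stand-in for SFT/RL: memorize winning answers, else fall back to the old model."""
    learned = dict(data.sft)
    return lambda instr: learned.get(instr, model(instr))


target = lambda instr: "ok"
rival = lambda instr: f"A detailed answer to: {instr}"
length_judge = lambda instr, a, b: (float(len(a)), float(len(b)))  # toy judge: longer answer wins

improved = flywheel(target, [rival], length_judge, [["q1", "q2"], ["q3"]], toy_train)
print(improved("q1"))  # A detailed answer to: q1
print(improved("q9"))  # ok
```

The toy training step only memorizes answers so the effect of each round is easy to see; a real pipeline would update model weights with the collected SFT and preference data.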

The approach centers on an offline chatbot arena that approximates the performance rankings of diverse models by using a separate, specialized model, referred to as the “judge model”, to assess the quality, relevance, and appropriateness of the battling models' responses. Automating this step drastically reduces the cost and constraints of manual evaluation, enabling training data to be generated at scale. Throughout the process, the target model is continuously updated and improved so that it remains competitive with top-tier models.
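The judge step can be sketched as follows. The prompt wording, the 1-10 scale, the output format, and the practice of querying the judge with both response orders to offset position bias are all assumptions for illustration, not details taken from the paper; `llm` stands for any hypothetical text-in, text-out call to a judge model.

```python
import re
from typing import Callable, Tuple

# Hypothetical judge prompt: the criteria mirror those named in the text,
# but the wording, scale, and reply format are illustrative assumptions.
JUDGE_TEMPLATE = (
    "You are an impartial judge. Rate each response to the instruction below for "
    "quality, relevance, and appropriateness on a scale of 1 to 10.\n\n"
    "[Instruction]\n{instruction}\n\n"
    "[Response A]\n{a}\n\n"
    "[Response B]\n{b}\n\n"
    'Answer exactly in the form "Score A: <n>, Score B: <n>".'
)

SCORE_RE = re.compile(r"Score A:\s*(\d+(?:\.\d+)?)\s*,\s*Score B:\s*(\d+(?:\.\d+)?)")


def parse_scores(text: str) -> Tuple[float, float]:
    """Extract (score_a, score_b) from the judge's reply."""
    match = SCORE_RE.search(text)
    if match is None:
        raise ValueError(f"unparseable judge output: {text!r}")
    return float(match.group(1)), float(match.group(2))


def judge_pair(llm: Callable[[str], str], instruction: str, resp_1: str, resp_2: str) -> Tuple[float, float]:
    """Score two responses, asking the judge in both orders to offset position bias."""
    prompt_12 = JUDGE_TEMPLATE.format(instruction=instruction, a=resp_1, b=resp_2)
    prompt_21 = JUDGE_TEMPLATE.format(instruction=instruction, a=resp_2, b=resp_1)
    s1_as_a, s2_as_b = parse_scores(llm(prompt_12))
    s2_as_a, s1_as_b = parse_scores(llm(prompt_21))
    return (s1_as_a + s1_as_b) / 2, (s2_as_a + s2_as_b) / 2


# A stand-in "judge" that always favors whichever response is shown first.
biased_llm = lambda prompt: "Score A: 8, Score B: 6"
print(judge_pair(biased_llm, "Explain recursion.", "answer one", "answer two"))  # (7.0, 7.0)
```

Because the stand-in judge always prefers the first response, asking in both orders cancels its bias and both responses receive the same averaged score.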

Experiments validated the effectiveness of Arena Learning, showing substantial performance improvements in models trained with it. The fully AI-driven training and evaluation pipeline achieved a 40-fold efficiency improvement over the human-based LMSYS Chatbot Arena. For evaluation, the researchers used WizardArena, an offline evaluation set designed to balance complexity and diversity. Its results closely aligned with those of the LMSYS Chatbot Arena, confirming that Arena Learning is a reliable and cost-effective alternative.
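One common way to quantify how closely two leaderboards agree is a rank correlation such as Spearman's ρ. The sketch below assumes that metric; the paper's own agreement measures may differ, and the model names and ratings are placeholders, not reported results.

```python
from typing import Dict


def ranks(scores: Dict[str, float]) -> Dict[str, int]:
    """Rank models by score, 1 = best. Assumes no tied scores."""
    ordered = sorted(scores, key=scores.get, reverse=True)
    return {model: position + 1 for position, model in enumerate(ordered)}


def spearman(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Spearman's rho over the models both leaderboards share (no-ties formula)."""
    shared = sorted(set(a) & set(b))
    n = len(shared)
    if n < 2:
        raise ValueError("need at least two shared models")
    rank_a = ranks({m: a[m] for m in shared})
    rank_b = ranks({m: b[m] for m in shared})
    d_squared = sum((rank_a[m] - rank_b[m]) ** 2 for m in shared)
    return 1 - 6 * d_squared / (n * (n * n - 1))


# Hypothetical ratings for illustration only; these are not results from the paper.
offline = {"model-a": 1250, "model-b": 1180, "model-c": 1100, "model-d": 1020}
human = {"model-a": 1240, "model-b": 1090, "model-c": 1150, "model-d": 1000}
print(round(spearman(offline, human), 3))  # 0.8
```

Here the two leaderboards disagree only on the order of `model-b` and `model-c`, so ρ stays high at 0.8; identical orderings would give 1.0.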

The researchers also showed that WizardArena can reliably evaluate different LLMs by predicting their Elo rankings. The results further highlighted how efficiently Arena Learning generates large-scale synthetic data for continuously refining LLMs through diverse training strategies.
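Elo rankings are derived from pairwise battle outcomes. The sketch below uses the classic sequential Elo update; the K-factor of 4, the base rating of 1000, and the battle records are assumed values for illustration, and the paper may fit its ratings differently.

```python
from typing import Dict, Iterable, Tuple


def elo_ratings(battles: Iterable[Tuple[str, str, float]], k: float = 4.0,
                base: float = 1000.0, scale: float = 400.0) -> Dict[str, float]:
    """Sequential Elo updates from (model_a, model_b, outcome) records.

    outcome is 1.0 if model_a wins, 0.0 if model_b wins, and 0.5 for a tie.
    """
    ratings: Dict[str, float] = {}
    for a, b, outcome in battles:
        r_a = ratings.setdefault(a, base)
        r_b = ratings.setdefault(b, base)
        # Expected score of model_a under the logistic Elo model.
        expected_a = 1.0 / (1.0 + 10 ** ((r_b - r_a) / scale))
        delta = k * (outcome - expected_a)
        ratings[a] = r_a + delta
        ratings[b] = r_b - delta  # zero-sum: b loses exactly what a gains
    return ratings


# Hypothetical judge verdicts, e.g. as produced by an offline arena.
battles = ([("model-x", "model-y", 1.0)] * 3
           + [("model-y", "model-z", 1.0)] * 3
           + [("model-x", "model-z", 0.5)])
ratings = elo_ratings(battles)
print(sorted(ratings, key=ratings.get, reverse=True))  # ['model-x', 'model-y', 'model-z']
```

Sequential Elo depends on the order in which battles are processed, which is one reason public leaderboards often refit ratings with a Bradley-Terry model or bootstrap over shuffled battle orders.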

In conclusion, Arena Learning offers an efficient, automated method for post-training LLMs. By eliminating the need for human evaluators, it enables continuous and cost-effective improvement of language models. Its iterative training process, driven by simulated battles, has proven highly effective at generating large-scale training data for LLMs. The approach underscores the potential of AI-powered solutions for scalable and efficient language model improvement, and it could substantially enhance the performance and efficiency of natural language processing models.