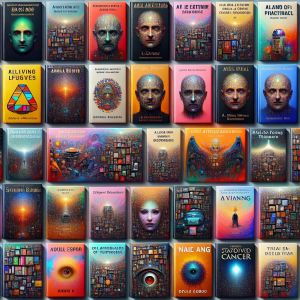

AI-generated books on Amazon claim insight into King’s cancer prognosis

After King Charles disclosed his recent cancer diagnosis, Buckingham Palace has warned that it may take legal action over artificial intelligence (AI)-generated books on Amazon that falsely claim insider insight into the king’s health. The books not only give inaccurate details about his medical condition but also speculate about his treatment.