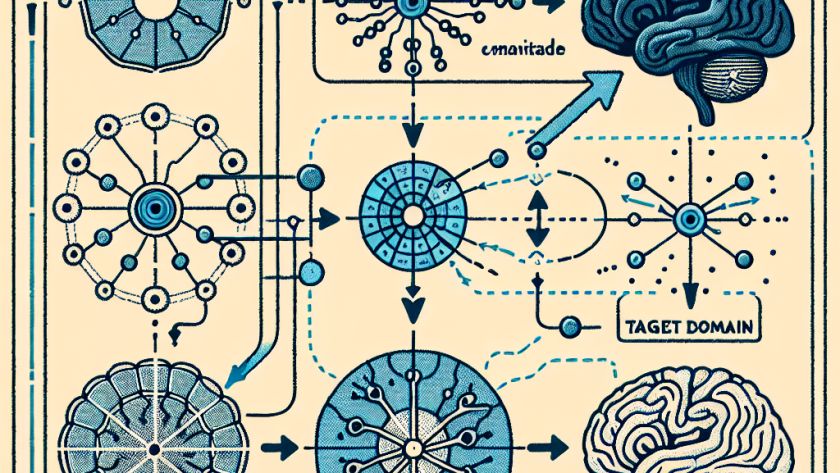

Advances in hardware and software have enabled the integration of AI into low-powered Internet of Things (IoT) devices such as microcontrollers. Deploying complex Artificial Neural Networks (ANNs) to these devices is nevertheless held back by constraints that necessitate techniques such as quantization and pruning. Shifts in data distribution between training and operational environments…