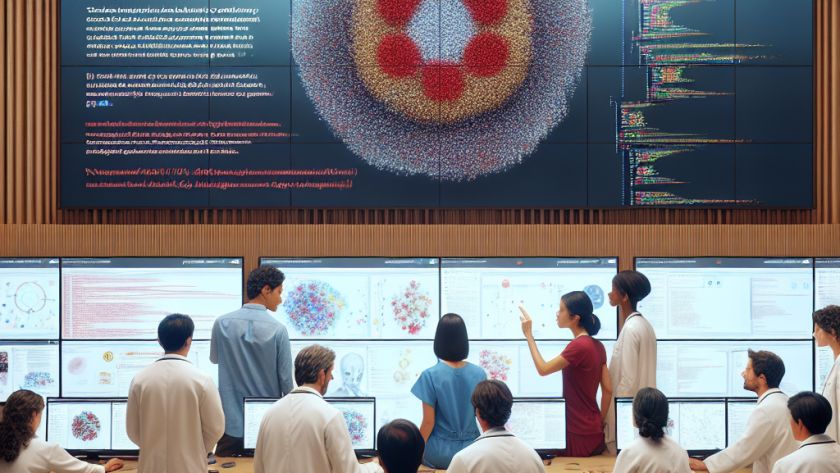

Large Language Models (LLMs) used in Information Retrieval (IR) applications, such as web search and question-answering systems, currently rely on human-crafted prompts for zero-shot relevance ranking, that is, ranking items by how closely they match the user's query. Crafting these prompts by hand is time-consuming and subjective. Additionally, this method struggles…