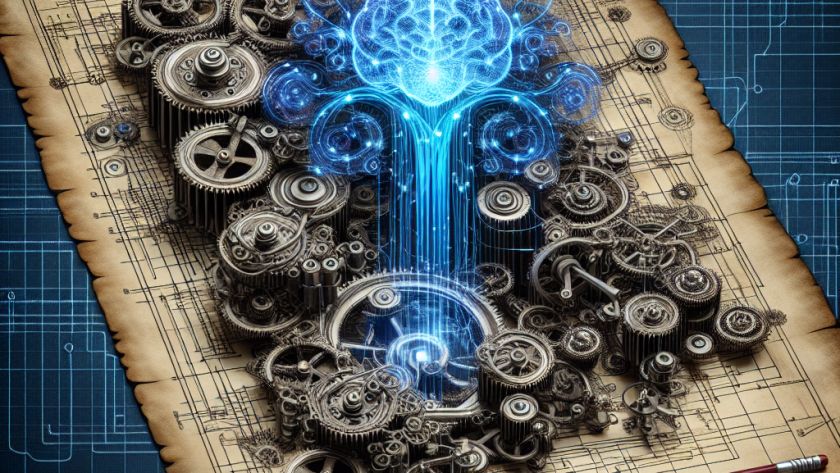

Data-driven techniques that convert offline datasets into policies, such as imitation learning and offline reinforcement learning (RL), are seen as solutions to control problems across many fields. However, recent research has suggested that simply collecting more expert data and fine-tuning an imitation learning policy can often outperform offline RL, even when RL has access to abundant data. This finding…