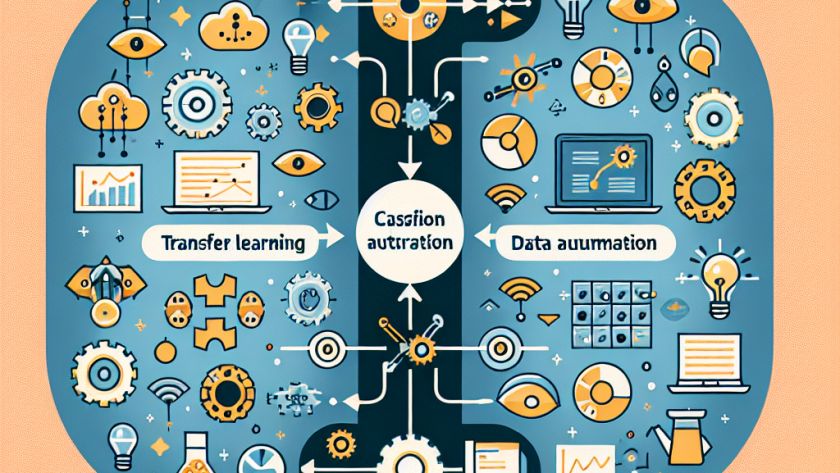

Machine learning is a crucial domain where differential privacy (DP) and selective classification (SC) play pivotal roles in safeguarding sensitive data. DP adds random noise to protect individual privacy while retaining the overall utility of the data, while SC chooses to refrain from making predictions in cases of uncertainty to enhance model reliability. These components…