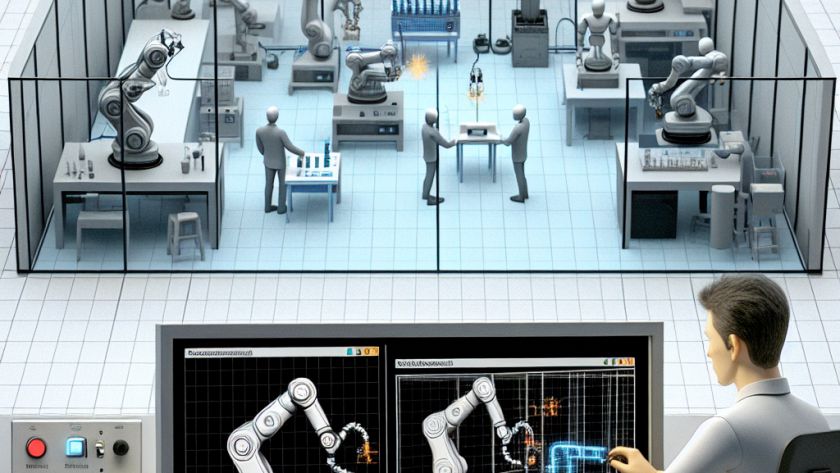

Reinforcement Learning (RL) is the study of learning to make decisions through interaction with an environment, and it has been applied effectively in games, robotics, and autonomous systems. RL agents aim to maximize cumulative reward, improving their performance by continually adapting to new data. However, the sample inefficiency of RL agents impedes their practical application by necessitating comprehensive…