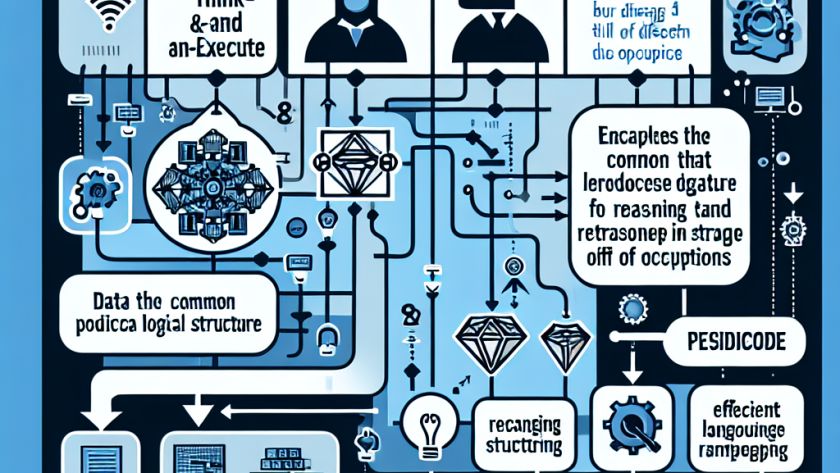

A group of researchers has created CodeEditorBench, a new evaluation framework designed to assess how well Large Language Models (LLMs) perform code editing tasks such as debugging, translating, and polishing. LLMs, which have advanced greatly with the rise of coding-related tasks, are mainly used for programming activities such as code improvement and…