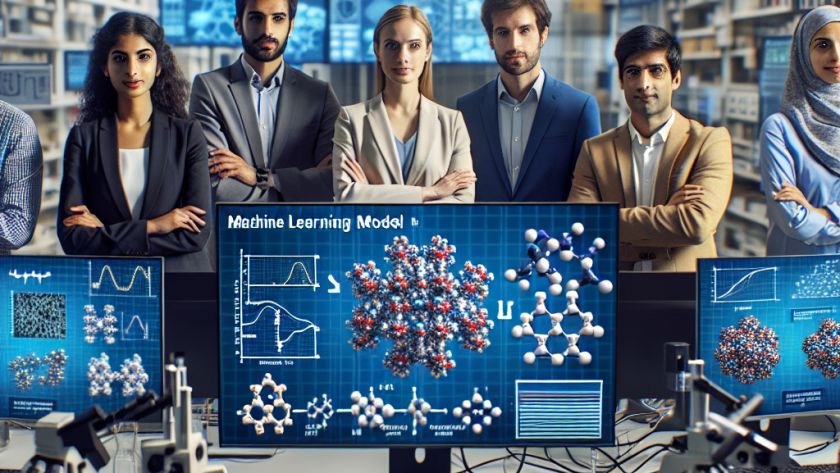

Researchers from Alibaba Group and the Renmin University of China have developed DocOwl 1.5, a Multimodal Large Language Model (MLLM) designed to better understand and interpret text-rich images. The model uses Unified Structure Learning to improve the performance of MLLMs across five domains: document, webpage, table, chart,…