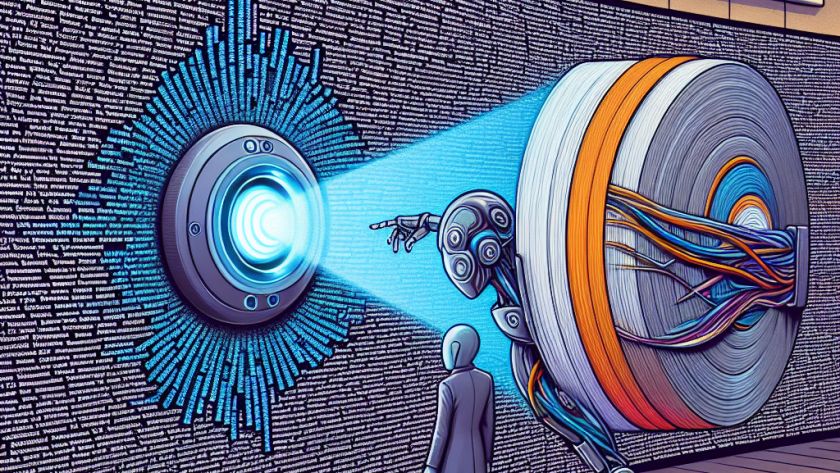

Within Natural Language Processing (NLP), reference resolution is a critical challenge. It involves determining what a specific word or phrase refers to, which is essential for understanding and handling diverse forms of context. That context can range from previous dialogue turns in a conversation to non-conversational elements such as entities on the user's screen or background processes.

Existing…