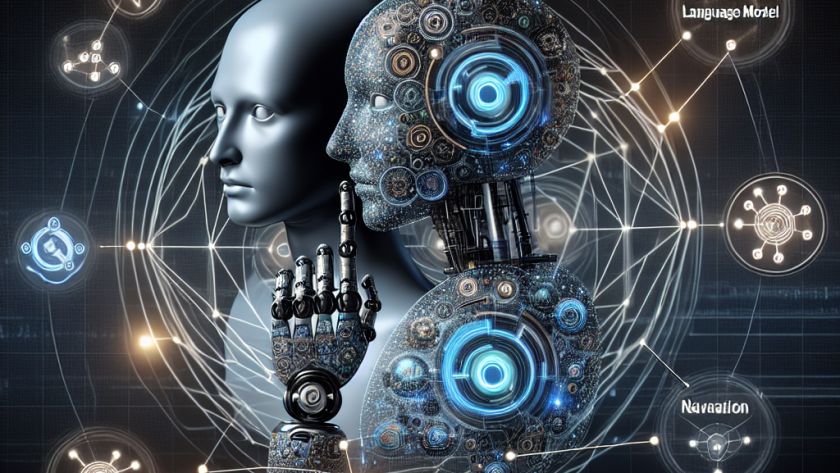

SciPhi has introduced Triplex, a large language model (LLM) designed for constructing knowledge graphs. The open-source model aims to make converting large volumes of unstructured data into structured formats less costly and less complex. The model is available on platforms such as Hugging Face and Ollama, serving as…