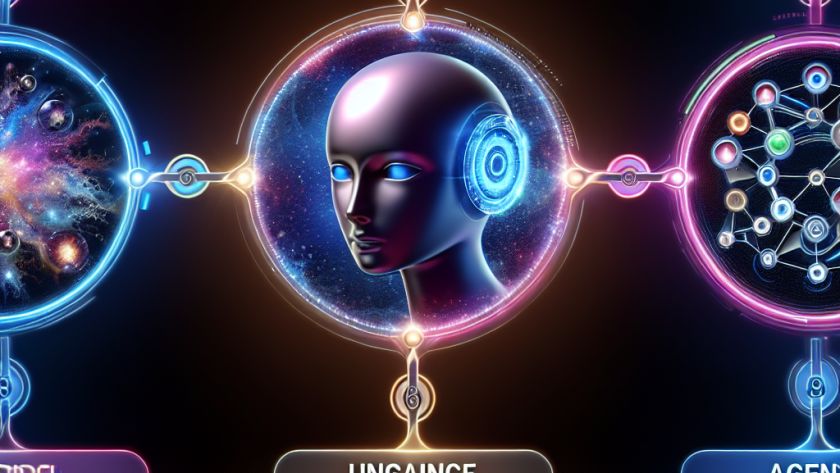

Large language models (LLMs) are known for storing vast amounts of factual information, which makes them effective at factual question-answering tasks. However, these models often produce plausible but incorrect responses because of failures in retrieving and applying their stored knowledge. This undermines their dependability and hinders their wide adoption…