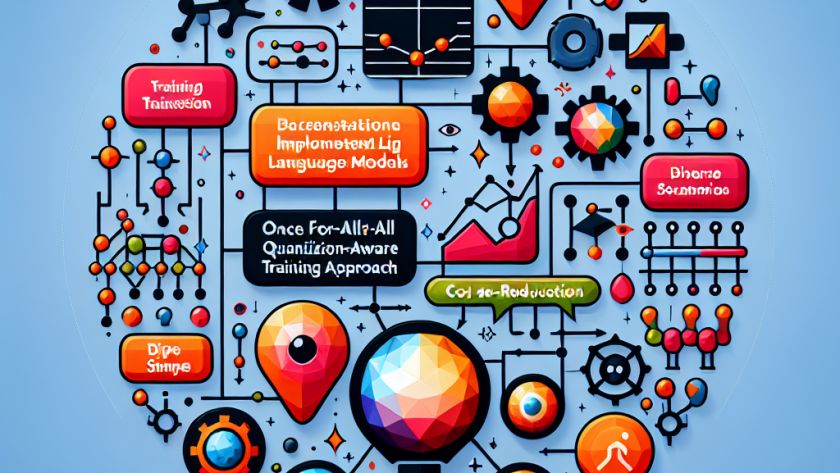

Large Language Models (LLMs) have shown great potential on natural language processing tasks such as summarization and question answering when used with zero-shot and few-shot prompting. However, prompting alone is insufficient for LLMs to act as agents that navigate environments and carry out complex, multi-step tasks. One reason for this is the lack of adequate training…

Intel, known for its leading-edge technology, offers a variety of AI courses that provide hands-on training for real-world applications. These courses are designed to help learners understand and effectively use Intel's broad AI portfolio, with a focus on deep learning, computer vision, and related areas. The courses cover a wide range of topics, providing comprehensive learning for those…

Handling and extracting information from file types such as PDFs and spreadsheets is a well-known source of complexity and inefficiency. Typical tools for the job often fall short in versatility, processing capacity, and maintainability. These shortcomings highlight the need for an efficient, user-friendly solution for parsing and representing…

IBM is advancing AI both by developing new technologies and by offering a broad range of courses. Its AI-focused initiatives give learners the tools to apply AI across many fields. IBM's courses provide practical skills and knowledge that allow learners to effectively implement AI solutions and…

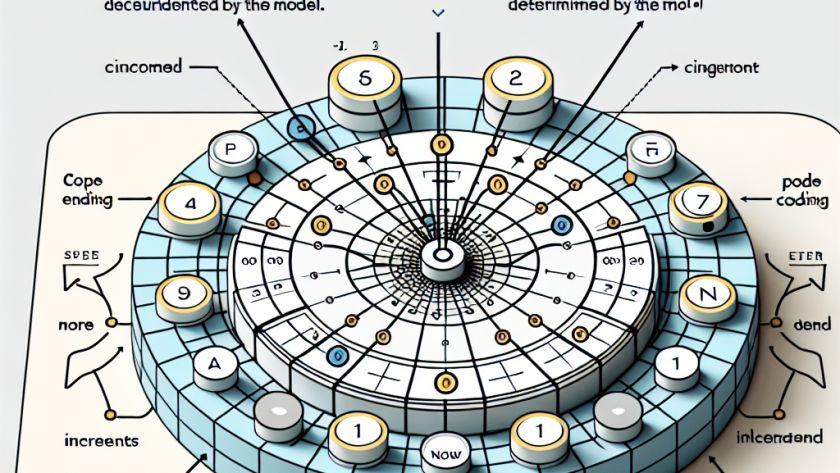

Text, audio, and code sequences rely on position information to convey meaning. Large language models (LLMs) built on the Transformer architecture do not inherently encode order and treat sequences as sets. Position Encoding (PE) addresses this by assigning a unique vector to each position. This approach is crucial for LLMs to…
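The teaser does not say which PE scheme the article covers. As one well-known example, here is a minimal sketch of the fixed sinusoidal encoding from the original Transformer paper (Vaswani et al., 2017), where dimensions 2i and 2i+1 of position `pos` hold sin and cos of pos / 10000^(2i/d_model). The function names and the use of plain Python lists are illustrative assumptions; real models typically compute this with a tensor library.

```python
import math


def sinusoidal_position_encoding(num_positions: int, d_model: int) -> list[list[float]]:
    """Return a num_positions x d_model table of sinusoidal position vectors.

    Even dimensions use sin and odd dimensions use cos; each (even, odd)
    pair shares one frequency, and frequencies decrease geometrically.
    """
    if d_model % 2 != 0:
        raise ValueError("d_model must be even")
    table = []
    for pos in range(num_positions):
        row = []
        # i steps over even indices, so i plays the role of 2i in the paper's formula
        for i in range(0, d_model, 2):
            angle = pos / (10000 ** (i / d_model))
            row.append(math.sin(angle))
            row.append(math.cos(angle))
        table.append(row)
    return table


def add_position_encoding(embeddings: list[list[float]]) -> list[list[float]]:
    """Add the position vector for each index to the token embedding at that index."""
    d_model = len(embeddings[0])
    pe = sinusoidal_position_encoding(len(embeddings), d_model)
    return [
        [e + p for e, p in zip(token_vec, pos_vec)]
        for token_vec, pos_vec in zip(embeddings, pe)
    ]


if __name__ == "__main__":
    pe = sinusoidal_position_encoding(num_positions=4, d_model=8)
    print(pe[0])  # → [0.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0, 1.0]

    # The same token embedding placed at positions 0 and 1
    same_token = [0.5] * 8
    x = add_position_encoding([same_token, same_token])
    print(x[0] == x[1])  # → False
```

Because identical tokens at different positions end up with different vectors after the addition, the model can distinguish orderings that self-attention alone would treat as equivalent. Learned and rotary position encodings are common alternatives to this fixed scheme.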

Improving the logical reasoning capabilities of Large Language Models (LLMs) is a critical challenge on the path toward Artificial General Intelligence (AGI). Despite current LLMs' impressive performance on many natural language tasks, their limited logical reasoning ability hinders their use in situations that require deep understanding and structured problem-solving.

The need to overcome…