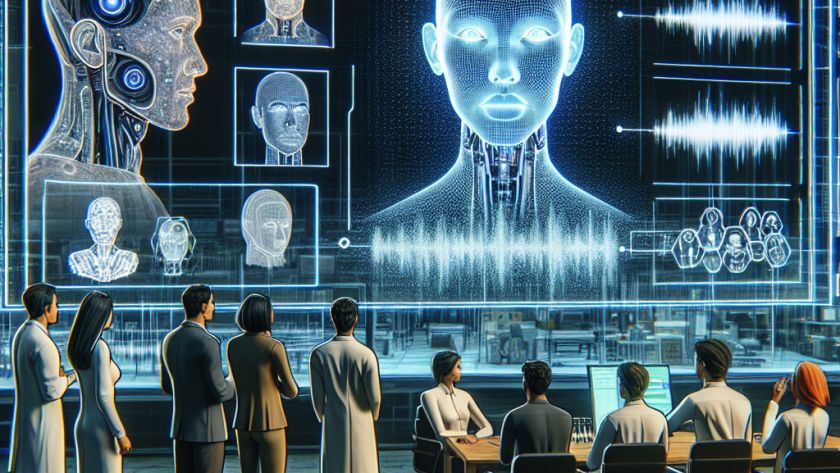

Exploring how reinforcement learning (RL) interacts with large language models (LLMs) opens up an exciting area of computational linguistics. These models, improved in large part through human feedback, are remarkably good at understanding and generating text that reads like human conversation. Still, they continue to evolve as researchers try to capture subtler human preferences. The main challenge lies…