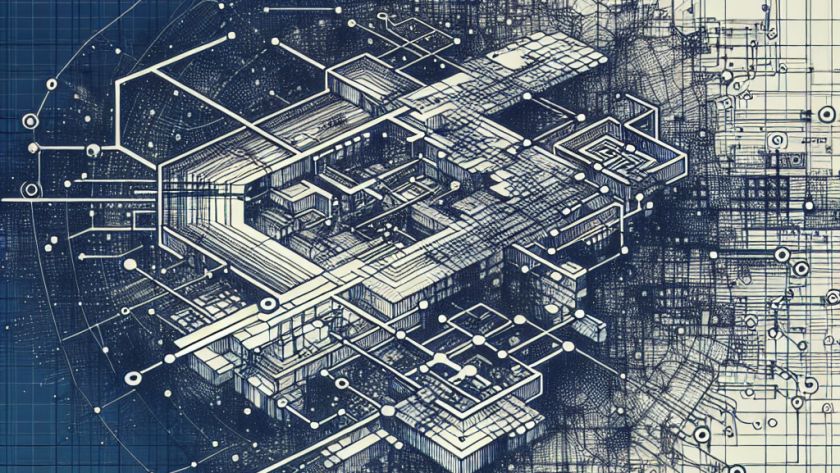

Machine learning researchers have developed a cost-effective reward mechanism to improve how language models interact with video data. The technique uses detailed video captions to measure the quality of responses produced by video language models. These captions serve as proxies for actual video frames, allowing language models to evaluate the factual accuracy of…
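
To make the caption-as-proxy idea concrete, here is a minimal sketch of how such a reward might be computed. It is an illustration under stated assumptions, not the researchers' implementation: the prompt wording, the 1 to 5 scale, the function names `build_judge_prompt` and `caption_reward`, and the `judge` callable are all hypothetical, and `stub_judge` is a placeholder for a real language model call.

```python
import re
from typing import Callable


def build_judge_prompt(caption: str, question: str, response: str) -> str:
    """Assemble a text-only prompt; the caption stands in for the video frames."""
    return (
        "Video description (proxy for the video):\n"
        f"{caption}\n\n"
        f"Question: {question}\n"
        f"Model response: {response}\n\n"
        "Rate the factual accuracy of the response against the description "
        "on a scale from 1 to 5. Reply with 'Score: <number>'."
    )


def caption_reward(
    caption: str,
    question: str,
    response: str,
    judge: Callable[[str], str],
) -> float:
    """Return a reward in [0, 1].

    `judge` is any text-in, text-out language model (hypothetical interface).
    """
    reply = judge(build_judge_prompt(caption, question, response))
    match = re.search(r"Score:\s*([1-5])", reply)
    if match is None:
        # Assumption: replies that cannot be parsed get the lowest reward.
        return 0.0
    # Map the 1..5 rating onto 0..1.
    return (int(match.group(1)) - 1) / 4.0


def stub_judge(prompt: str) -> str:
    # Placeholder standing in for a real language model call.
    return "Score: 4"


reward = caption_reward(
    caption="A man slices an onion on a wooden board, then adds it to a pan.",
    question="What is the man cutting?",
    response="He is cutting an onion.",
    judge=stub_judge,
)
print(reward)
```

Because the judge only ever sees text, no video frames need to be processed at scoring time, which is where the cost saving described above would come from.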