Researchers from Fudan University and Microsoft have developed a novel architecture for large multimodal models (LMMs), called "DeepStack." DeepStack takes a different approach to processing visual data, improving both computational efficiency and performance.

Traditional LMMs typically integrate visual and textual data by converting images into visual tokens, which are then processed…

Instruct-MusicGen, a new method for text-to-music editing, has been introduced by researchers from C4DM, Queen Mary University of London, Sony AI, and Music X Lab, MBZUAI. The approach aims to improve on existing models, which require significant resources and fail to deliver precise results. Instruct-MusicGen utilizes pre-trained models and innovative training techniques to accomplish high-quality…

AI systems, particularly large language models (LLMs) and multimodal models, have vulnerabilities that can be exploited to produce harmful outputs, raising questions about their safety and reliability. Existing defenses, such as refusal training and adversarial training, often fall short against sophisticated adversarial attacks and may degrade model performance.

Addressing these limitations, a research team from Black…

Large Language Models (LLMs) such as GPT-3 and Llama face significant inefficiencies during large-scale training due to hardware failures and network congestion. These issues can lead to a substantial waste of GPU resources and extended training durations. Existing methods to address these challenges, which involve basic fault tolerance and traffic management strategies, are often inefficient…

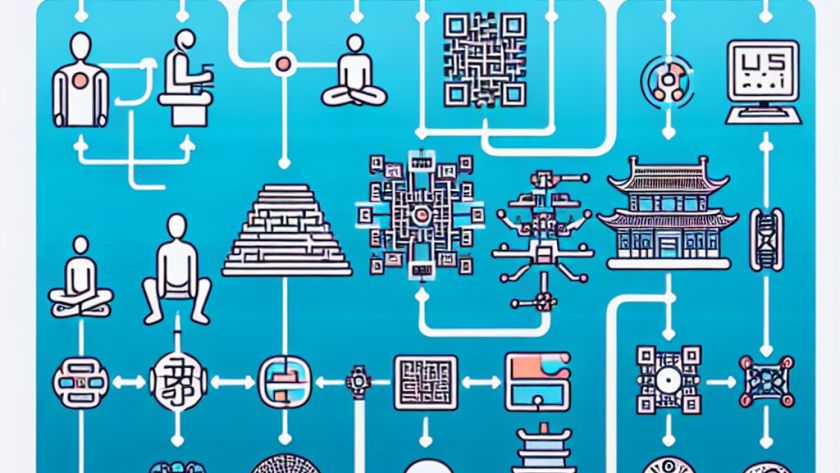

Natural Language Processing (NLP) aims to enable computers to understand and generate human language, facilitating human-computer interaction. Despite advancements in NLP, large language models (LLMs) often fall short on complex planning tasks, such as decision-making and organizing actions, abilities that are crucial in a diverse array of applications from daily tasks to strategic…

Large Language Models (LLMs) have revolutionized the field of Natural Language Processing (NLP). However, they often generate ungrounded or factually incorrect information, an issue informally known as 'hallucination'. This is particularly noticeable when it comes to Question Answering (QA) tasks, where even the most advanced models, such as GPT-4, struggle to provide accurate responses. The…

Large Language Models (LLMs) have transformed the field of Natural Language Processing (NLP), specifically in Question Answering (QA) tasks. However, their utility is often hampered by the generation of incorrect or unverified responses, a phenomenon known as hallucination. Despite the development of advanced models like GPT-4, issues remain in accurately answering questions related to changing…