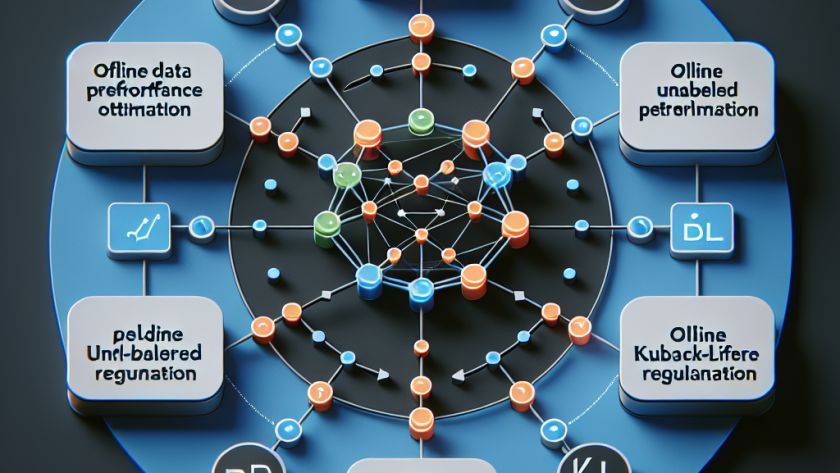

Relational databases are fundamental to many digital systems and play a critical role in data management across sectors such as e-commerce, healthcare, and social media. Their table-based structure lets them efficiently organize and retrieve the data that operations in these fields depend on. Yet the full potential of the valuable relational information within these…