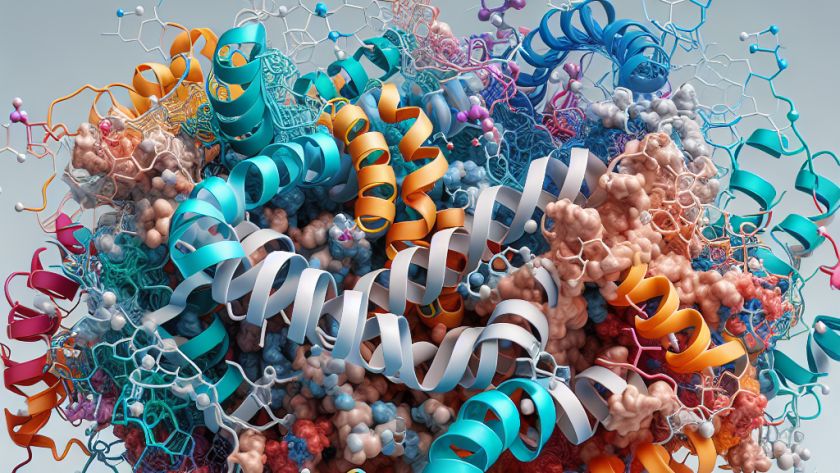

Researchers from multiple institutions, including the Institute of Structural Biology and the Technical University of Munich, have developed a novel approach to drug discovery named MISATO. It is designed to improve drug design, a critical task in computational chemistry and structural biology.…

Autoregressive language models (ALMs) have become invaluable tools for machine translation, text generation, and similar tasks. Despite their success, they still face challenges such as high computational cost and heavy GPU memory usage, which makes a cost-effective way to run these models an urgent need. Large language models (LLMs), which use the KV cache mechanism to enhance…

Large language models (LLMs), such as GPT-3 and ChatGPT, have been shown to exhibit advanced capabilities in complex reasoning tasks, outperforming standard supervised machine learning techniques. The key to unlocking these abilities is "chain of thought" (CoT) prompting, a method that mimics human-like, step-by-step reasoning. Importantly, the use of CoT…

Language models, a subset of artificial intelligence (AI), are used in many applications, including chatbots, predictive text, and language translation services. A significant challenge for AI researchers is making these models more efficient while also improving their ability to comprehend and process large amounts of data.

Imperative to the field of…

Artificial intelligence models, particularly large language models (LLMs), have made significant strides in generating coherent and contextually appropriate language. However, they sometimes produce content that seems correct but is actually inaccurate or irrelevant, a problem often referred to as "hallucination". This is a serious issue in areas where high factual accuracy is critical,…

Research on hallucinations in vision-language models (VLVMs), where these AI systems generate coherent but factually incorrect responses, is rapidly gaining attention. Because VLVMs combine text and visual inputs and are applied in crucial domains such as medical diagnostics and autonomous driving, the accuracy of their outputs is…

Artificial Intelligence (AI) systems, such as Vision-Language Models (VLVMs), are becoming increasingly advanced, integrating text and visual inputs to generate responses. These models are being used in critical contexts, such as medical diagnostics and autonomous driving, where accuracy is paramount. However, researchers have identified a significant issue in these models, which they refer to as…

Mixture-of-experts (MoE) architectures, designed to scale model size while keeping training and inference efficient, are difficult to optimize because their routing is discrete and non-differentiable. Traditional MoEs use a router network that directs each input to one or more expert modules, a process that is complex and can lead to inefficiencies and under-specialization of the experts.…
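To make the routing step concrete, here is a minimal sketch in plain Python, assuming a Switch-style top-1 router: a linear layer scores every expert, and the input is sent only to the highest-scoring one. The class `Top1MoE`, its single-matrix "experts", and the gate scaling are hypothetical illustrations, not the method from the work described above. The `argmax` selection is the discrete, non-differentiable step the teaser refers to.

```python
import math
import random

def softmax(xs):
    # Numerically stable softmax over a list of logits.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def matvec(W, x):
    # Multiply a matrix (list of rows) by a vector.
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

class Top1MoE:
    """Hypothetical toy MoE layer: a linear router picks one expert per input."""

    def __init__(self, dim, num_experts, seed=0):
        rng = random.Random(seed)
        # Router weights: one row per expert, yielding one logit per expert.
        self.router = [[rng.gauss(0, 1) for _ in range(dim)]
                       for _ in range(num_experts)]
        # Each expert is a single dim x dim linear map (toy stand-in for an FFN).
        self.experts = [[[rng.gauss(0, 1) for _ in range(dim)] for _ in range(dim)]
                        for _ in range(num_experts)]

    def forward(self, x):
        probs = softmax(matvec(self.router, x))
        # Discrete choice: argmax is piecewise constant, so no gradient
        # tells the router which *other* expert would have been better.
        k = max(range(len(probs)), key=lambda i: probs[i])
        y = matvec(self.experts[k], x)
        # Scaling by the gate probability lets the router's weights receive
        # some gradient through probs[k], as in Switch-style routing.
        return k, [probs[k] * yi for yi in y]
```

Usage: `Top1MoE(dim=4, num_experts=3).forward([0.5, -1.0, 2.0, 0.1])` returns the chosen expert index and a length-4 output vector. Only one expert runs per input, which is where the efficiency comes from, but the hard selection is also why such routers are hard to train and can leave some experts under-used.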