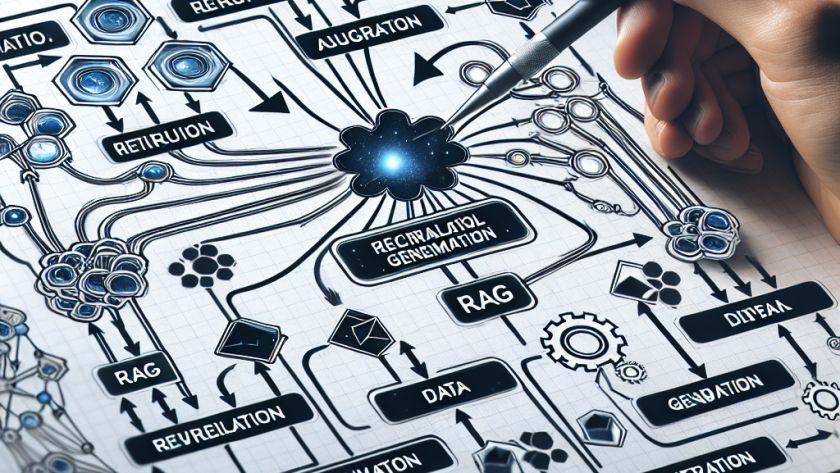

Researchers from Huazhong University of Science and Technology, the University of New South Wales, and Nanyang Technological University have unveiled HalluVault, a novel framework intended to improve the efficiency and accuracy of data processing in machine learning and data science. The framework is designed to detect Fact-Conflicting Hallucinations (FCH) in Large Language…