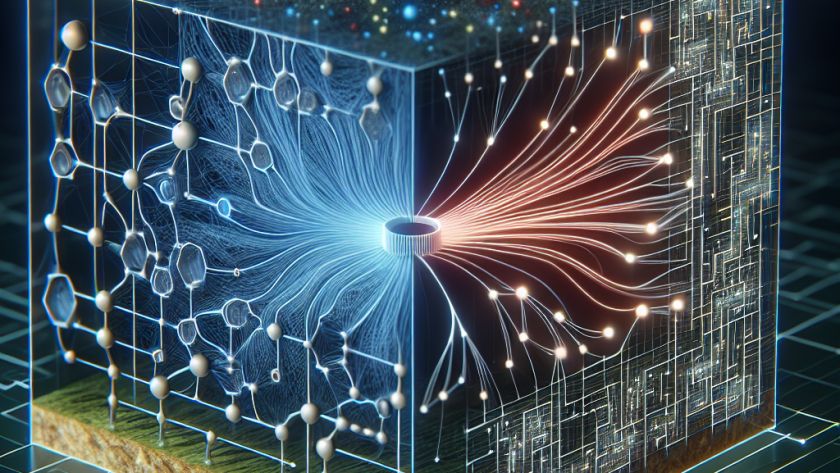

A team of researchers from MIT and other institutions has developed a method that prevents the performance deterioration seen in AI language models during long, continuous dialogue, such as conversations with the chatbot ChatGPT. Named StreamingLLM, the solution modifies the model’s key-value cache, which acts as a conversation memory. Conventionally, when the cache overflows, the…