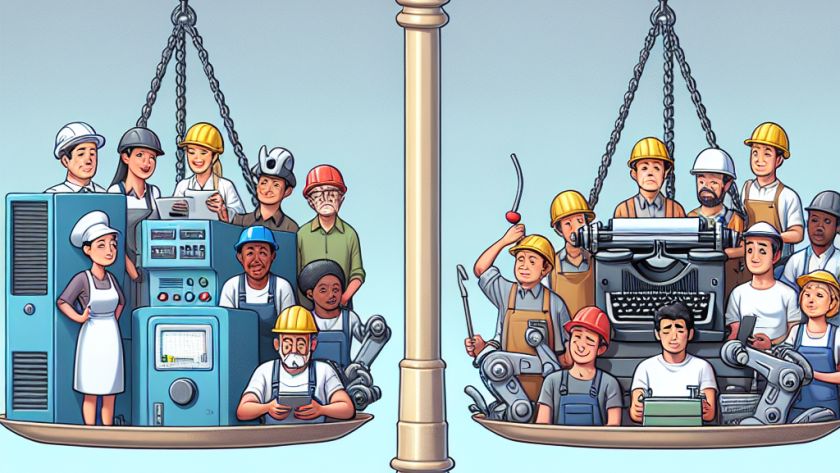

From a young age, humans show an impressive ability to combine their knowledge and skills in novel ways to solve problems. This principle of compositional reasoning is a critical aspect of human intelligence: it allows our brains to build complex representations from simpler parts. Unfortunately, AI systems have struggled to replicate this capability…