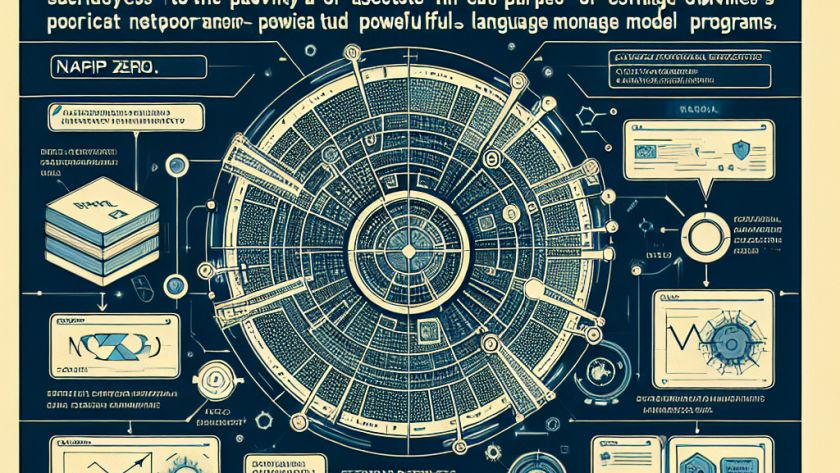

NuMind has unveiled NuExtract, a text-to-JSON language model built to turn unstructured text into structured data. It marks a significant step forward for structured data extraction.

NuExtract stands apart from its competitors through its innovative design and training methods. It delivers exceptional performance while remaining cost-efficient. It is designed to interact efficiently…