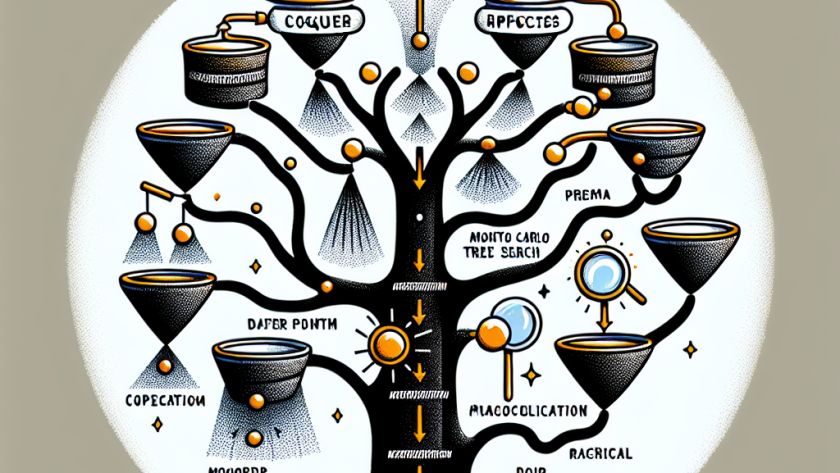

Algorithms, Artificial Intelligence, Chemical engineering, Chemistry, Computer science and technology, Defense Advanced Research Projects Agency (DARPA), Drug development, Electrical Engineering & Computer Science (eecs), Machine learning, MIT Schwarzman College of Computing, National Science Foundation (NSF), Pharmaceuticals, Research, School of Engineering, Uncategorized

June 18, 2024