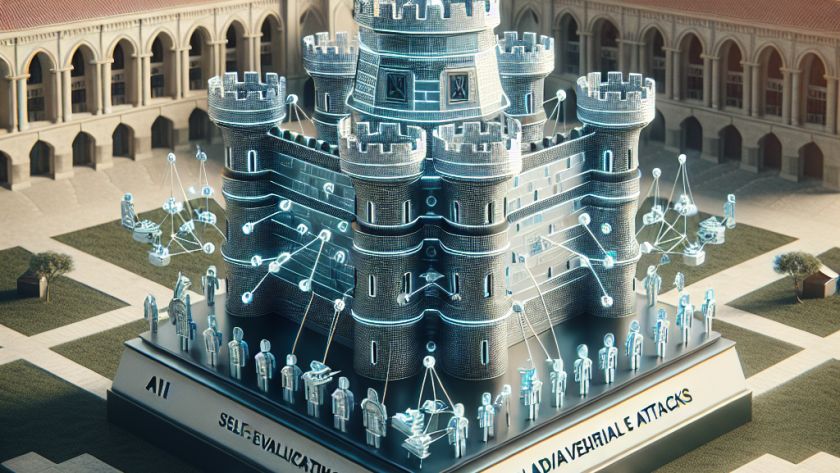

Recent research on Predictive Large Models (PLMs) aims to align these models with human values, avoiding harmful behaviors while maximising efficiency and applicability. Two prominent alignment methods are supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF). RLHF, notably, applies a learned reward model to new prompt-response pairs. However, this approach often faces…