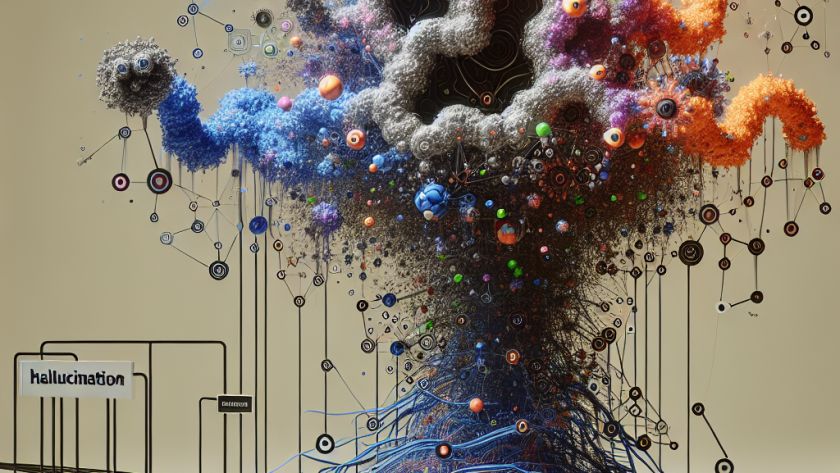

Large Language Models (LLMs) have revolutionized the field of Natural Language Processing (NLP). However, they often generate ungrounded or factually incorrect information, an issue informally known as "hallucination". The problem is particularly noticeable in Question Answering (QA) tasks, where even the most advanced models, such as GPT-4, struggle to provide accurate responses. The…