Large Language Models (LLMs) power many applications, including chatbots and data analysis tools, largely because they can process large volumes of text efficiently. As AI technology has advanced, the need for high-quality training data has grown with it, since that data is critical both to how well these models work and to how they can be improved.

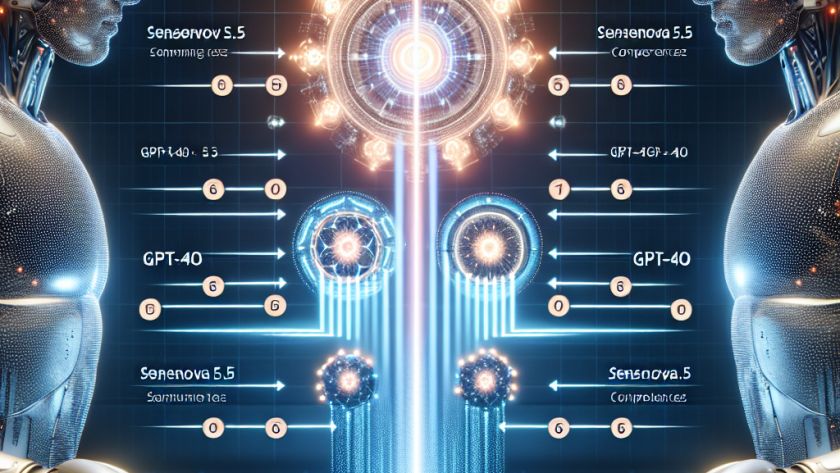

A major challenge in AI development is guaranteeing…