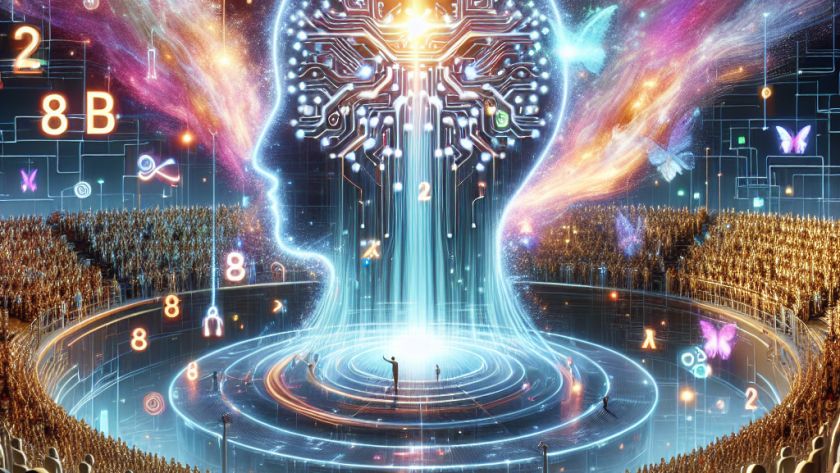

SambaNova has unveiled Samba-CoE v0.3, the latest version of its Composition of Experts (CoE) system, marking a significant advance in both the effectiveness and the efficiency of machine learning models. On the OpenLLM Leaderboard, Samba-CoE v0.3 outperformed competitors such as DBRX Instruct 132B and Grok-1 314B.

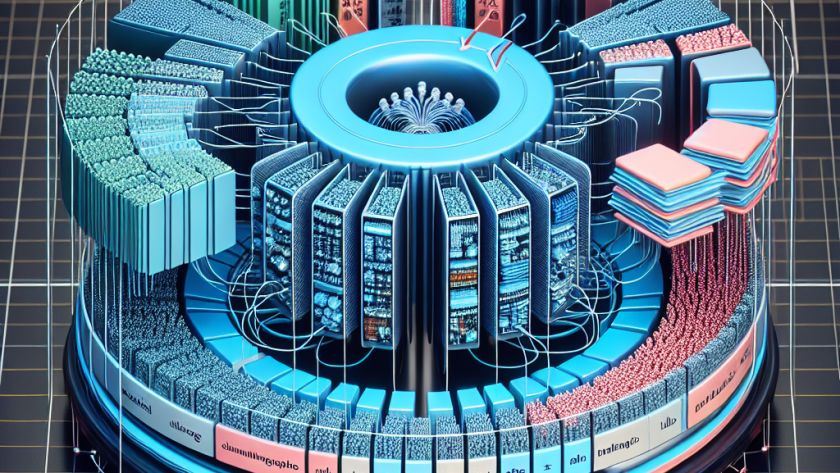

Samba-CoE v0.3 unveils a new and efficient routing…