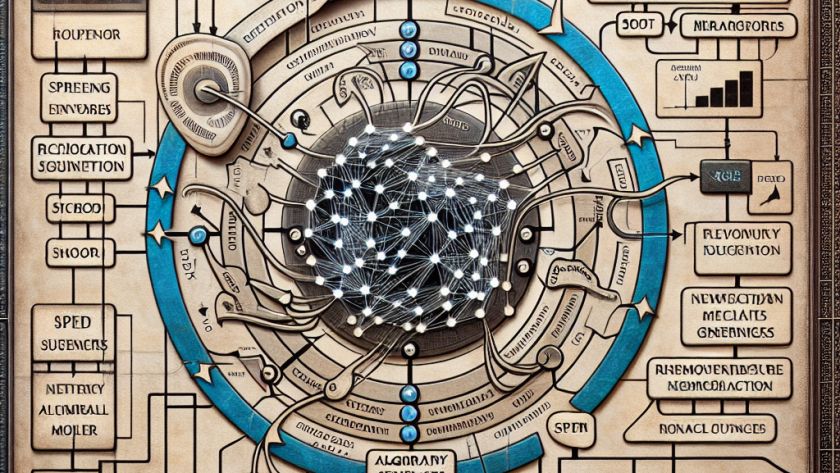

Language model evaluation is a central focus of artificial intelligence (AI) research. It assesses the capabilities and performance of models across a range of tasks, identifying their strengths and weaknesses to guide future development. A key challenge in this area, however, is the lack of…