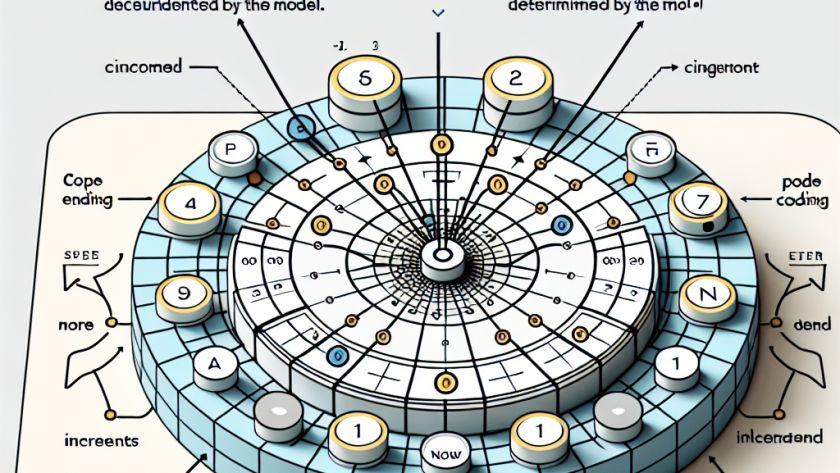

The meaning of text, audio, and code sequences depends on the order of their elements. Large language models (LLMs) built on the Transformer architecture do not inherently contain order information and treat a sequence as an unordered set. Position Encoding (PE) addresses this by assigning a unique vector to each position. This approach is crucial for LLMs to…
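To make the idea of "a unique vector per position" concrete, here is a minimal sketch of one well-known scheme, the fixed sinusoidal encoding from the original Transformer paper. The excerpt does not say which encoding the article discusses, so treat this as an illustrative assumption; the function name and parameters are hypothetical.

```python
import math

def sinusoidal_position_encoding(num_positions, d_model):
    """Return num_positions vectors of length d_model (hypothetical helper)."""
    if d_model % 2 != 0:
        raise ValueError("d_model must be even so dimensions pair up as sin/cos")
    table = []
    for pos in range(num_positions):
        vec = []
        for i in range(0, d_model, 2):
            # Each sin/cos pair shares one frequency; the frequency shrinks as i grows.
            angle = pos / (10000 ** (i / d_model))
            vec.append(math.sin(angle))
            vec.append(math.cos(angle))
        table.append(vec)
    return table

# Example: encodings for a 4-token sequence with 8-dimensional embeddings.
pe = sinusoidal_position_encoding(num_positions=4, d_model=8)
```

In a Transformer these vectors would typically be added to the token embeddings before the first attention layer, so that identical tokens at different positions get different inputs.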

Improving the logical reasoning capabilities of Large Language Models (LLMs) is a critical challenge on the path toward Artificial General Intelligence (AGI). Despite their impressive performance on a range of natural language tasks, current LLMs have limited logical reasoning ability, which hinders their use in situations requiring deep understanding and structured problem-solving.

The need to overcome…

Large language models (LLMs) continue to advance, yet they struggle to incorporate new knowledge without forgetting what they learned previously, a problem known as "catastrophic forgetting." Existing methods such as retrieval-augmented generation (RAG) are not very effective on tasks that require integrating new knowledge across multiple passages, due to encoding each passage in…

Natural Language Processing (NLP) has undergone a dramatic transformation in recent years, largely due to advanced Transformer-based language models. The emergence of Retrieval-Augmented Generation (RAG) is one of the most notable developments in this field. RAG integrates retrieval systems with generative models, resulting in versatile, efficient, and accurate language models. However, before delving…

Researchers from the University of Minnesota have developed a new method to improve the performance of large language models (LLMs) on knowledge graph question-answering (KGQA) tasks. The new approach, GNN-RAG, uses Graph Neural Networks (GNNs) for retrieval in retrieval-augmented generation (RAG), which enhances the LLMs' ability to answer questions accurately.

LLMs have notable natural language understanding capabilities,…

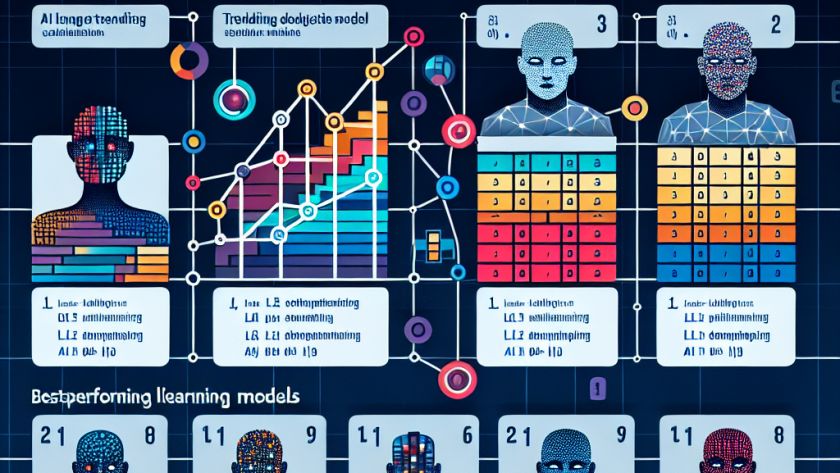

Scale AI's Safety, Evaluations, and Alignment Lab (SEAL) has unveiled the SEAL Leaderboards, a new ranking system for evaluating the growing number of large language models (LLMs) that are becoming increasingly significant in AI development. Designed to offer fair, systematic evaluations of AI models, the leaderboards will serve to highlight disparities and compare performance levels…