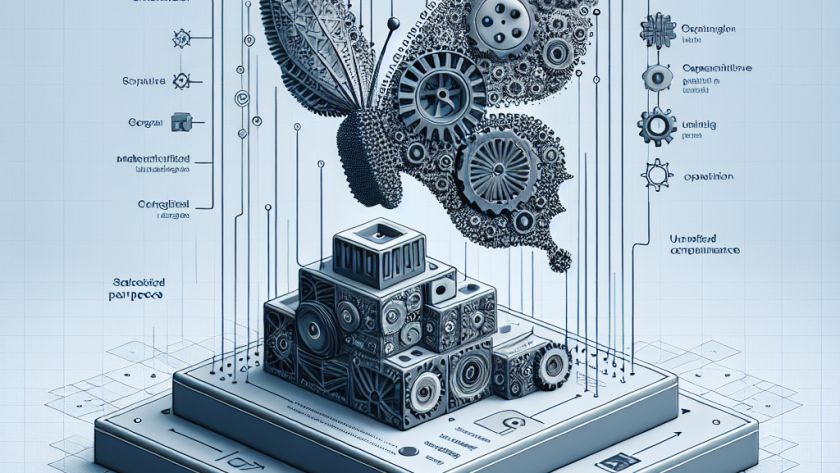

Memory is a crucial component of intelligence, facilitating the recall and application of past experiences to current situations. However, both traditional Transformer models and Transformer-based Large Language Models (LLMs) are limited in their context-dependent memory by the way their attention mechanisms work. The limitation primarily concerns the memory consumption and computation time of these attention…