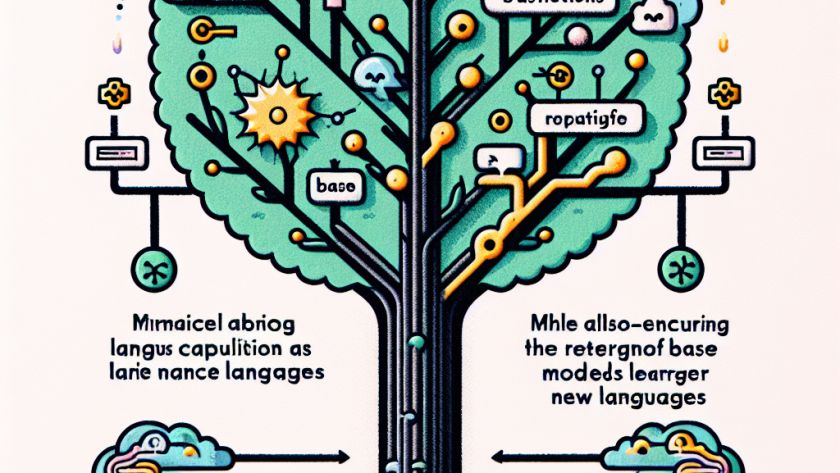

Robotics has changed significantly with the integration of generative methods such as Large Language Models (LLMs). These advances are driving the development of systems that can autonomously navigate and adapt to diverse environments. In particular, applying LLMs to the design and control of robots marks a major step forward…