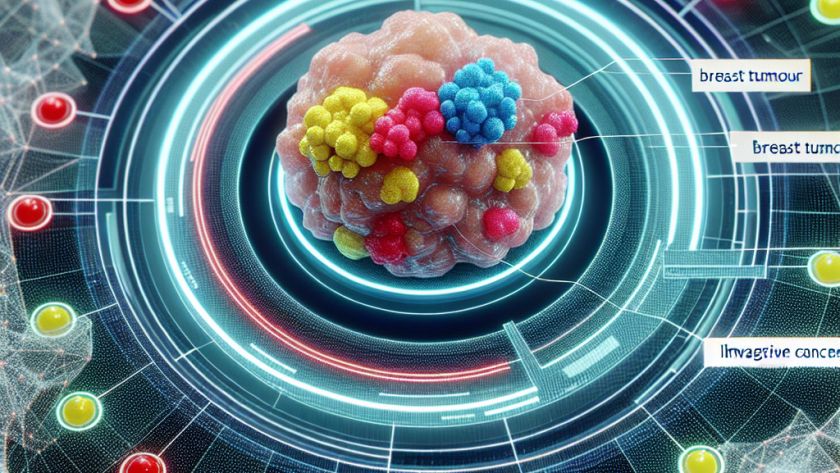

Artificial Intelligence, Broad Institute, Computer Science and Artificial Intelligence Laboratory (CSAIL), Computer science and technology, Electrical Engineering & Computer Science (EECS), Health care, Imaging, Machine learning, MIT Schwarzman College of Computing, National Institutes of Health (NIH), Research, School of Engineering, Uncategorized

July 21, 2024

A research team from MIT, the Broad Institute of MIT and Harvard, and Massachusetts General Hospital has developed an artificial intelligence (AI) tool, named Tyche, that presents multiple plausible interpretations of a medical image, highlighting potentially important and varied insights. The tool aims to address the ambiguity that often arises in medical image interpretation, where different experts…
