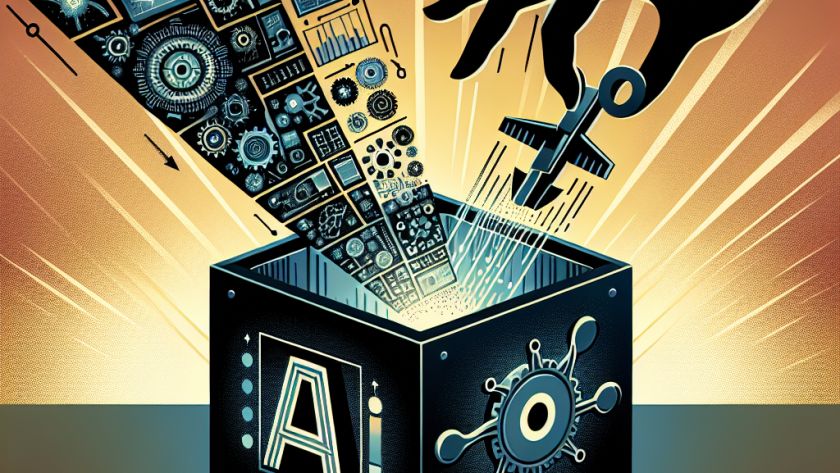

A research team at Microsoft has developed a new, more efficient method for teaching robots complex tasks. The method, called Primitive Sequence Encoding (PRISE), lets machines break intricate activities down into simpler steps and learn those steps one at a time. The technique shows promise for improving machines' overall learning capability and performance within a shorter…