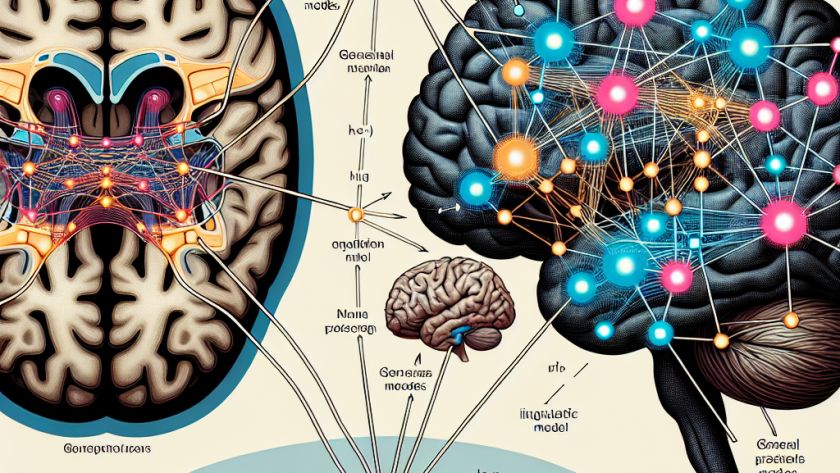

A persistent challenge in natural language processing is the factual precision of language models, particularly large language models (LLMs). LLMs often produce factual errors, or 'hallucinations', because they rely solely on the knowledge encoded in their parameters during training.

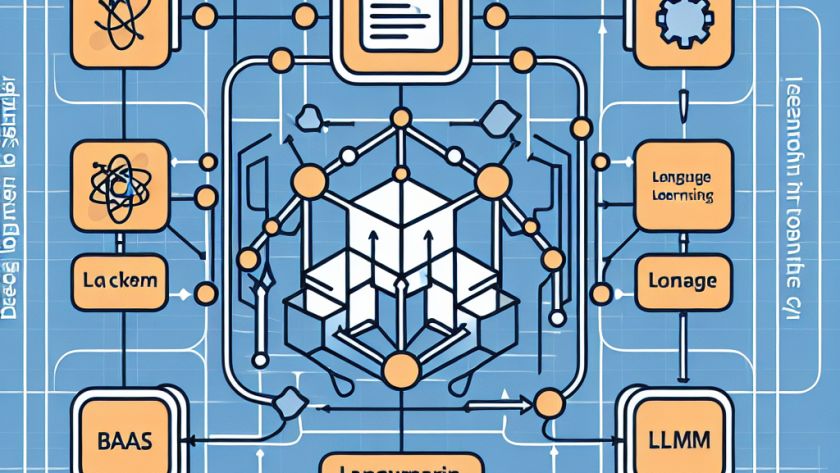

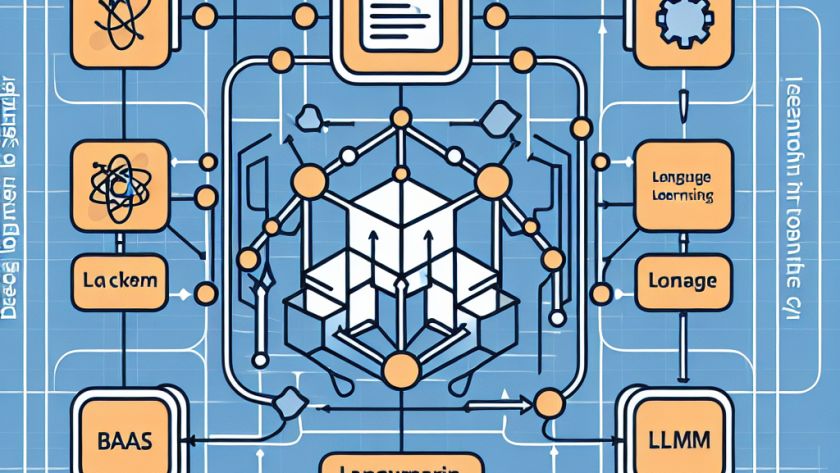

Retrieval-augmented generation (RAG) was introduced to improve LLM output by retrieving relevant external knowledge and supplying it to the model as context during generation. However, RAG’s effectiveness…
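To make the retrieve-then-generate idea concrete, here is a minimal Python sketch. It assumes a toy bag-of-words retriever scored with cosine similarity, standing in for the dense or sparse retrievers that practical RAG systems use, and it stops at building the augmented prompt. The function names `retrieve` and `build_prompt` are hypothetical, and the call to an actual LLM is deliberately left out.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase the text and split it into alphanumeric tokens.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two bag-of-words count vectors.
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    # Hypothetical toy retriever: rank documents by similarity to the query
    # and keep the top k.
    q = Counter(tokenize(query))
    ranked = sorted(corpus, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    # Prepend the retrieved passages so the generator can ground its answer
    # in external knowledge rather than only its parameters.
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer using only the context above."


if __name__ == "__main__":
    corpus = [
        "The Eiffel Tower is located in Paris, France.",
        "Mount Everest is the highest mountain above sea level.",
        "Paris is the capital of France.",
    ]
    query = "Where is the Eiffel Tower located?"
    # The resulting prompt would then be passed to an LLM (not shown).
    print(build_prompt(query, retrieve(query, corpus, k=1)))
```

The point of the sketch is the separation of concerns: retrieval quality determines what knowledge reaches the model, so a poor retriever can pass irrelevant passages into the prompt just as easily as useful ones.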