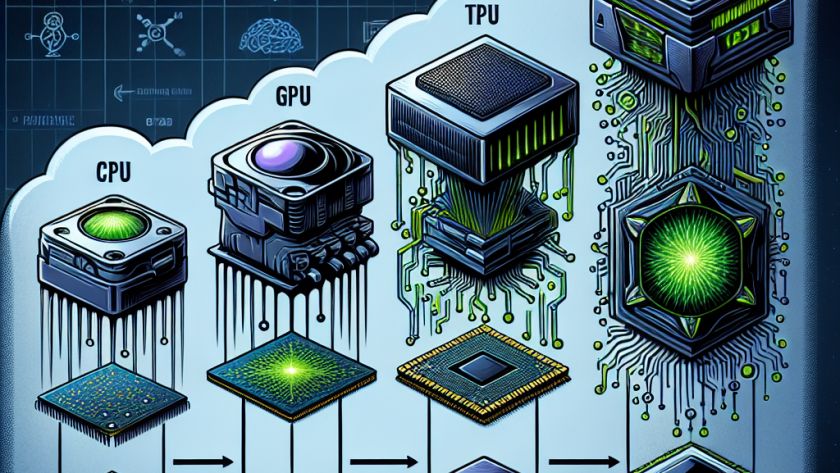

The proliferation of deep learning has transformed many industries, including healthcare and autonomous driving. These breakthroughs have relied on parallel advances in hardware, particularly GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units).

GPUs have been instrumental in the deep learning revolution. Although originally designed to handle computer…