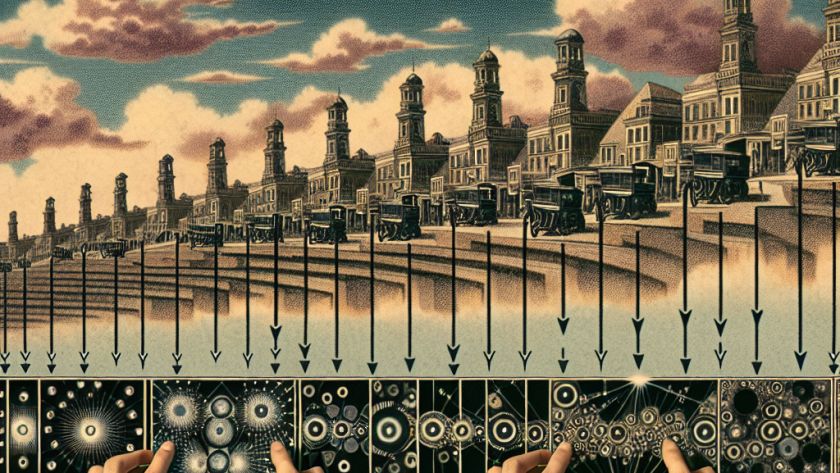

OpenAI has introduced a five-level classification framework to track its progress toward artificial intelligence (AI) that can surpass human performance, adding to its existing commitments on AI safety and future improvements.

At Level 1, "Conversational AI", models like ChatGPT are capable of basic interaction with people. These chatbots can understand and respond…