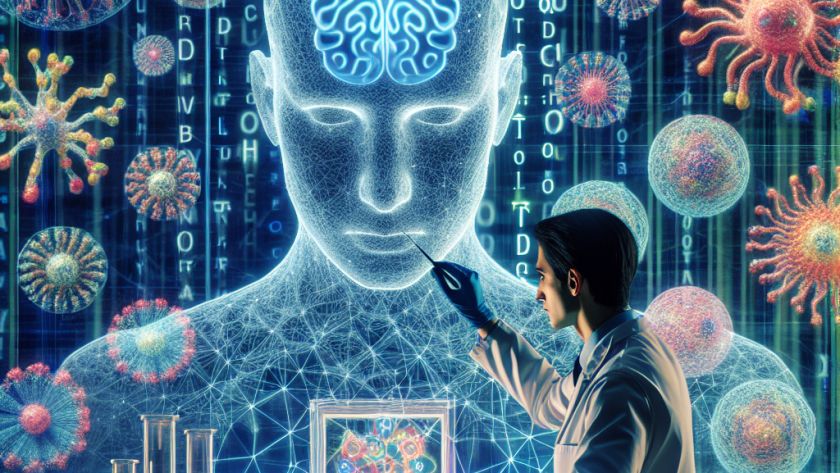

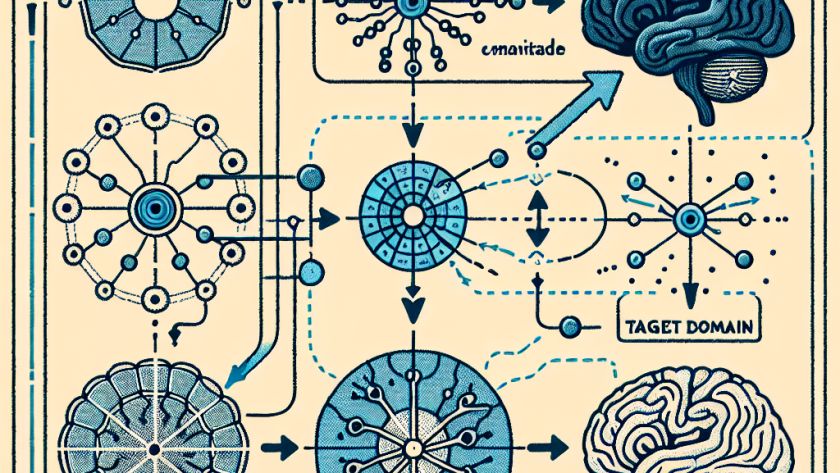

Large Language Models (LLMs) are advanced artificial intelligence tools designed to understand, interpret, and respond to human language in a way that resembles human speech. Because they can interact directly with people, they are now used in areas such as customer service, mental health, and healthcare. Recently, however, researchers from the National…