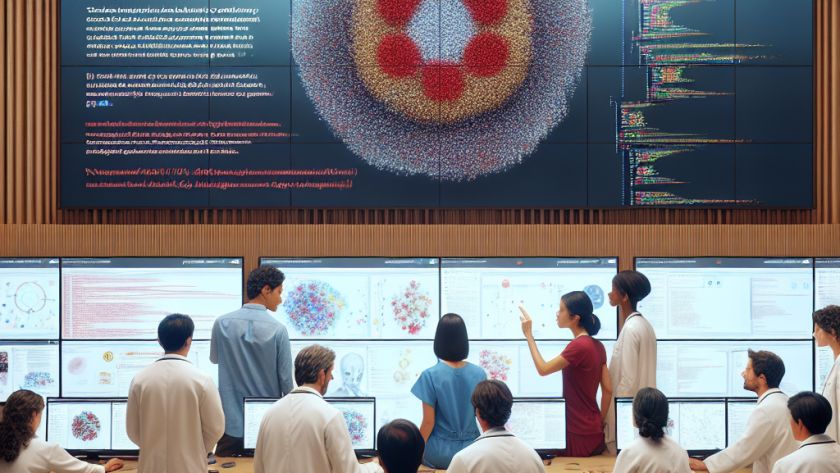

Long-Context Language Models (LCLMs) have emerged as a new frontier in artificial intelligence, with the potential to handle complex tasks and applications without the intricate pipelines that limited context lengths traditionally required. Unfortunately, their evaluation and development have been fraught with challenges. Most evaluations rely on synthetic tasks with fixed-length…