Google's Graph Mining team has developed TeraHAC, a new algorithm capable of clustering extremely large datasets with hundreds of billions, or even trillions, of data points. Clustering is commonly used in tasks such as prediction and information retrieval and involves grouping similar items to better comprehend the relationships…

Google's Graph Mining team has unveiled TeraHAC, a clustering algorithm designed to process massive datasets with hundreds of billions of data points; such clustering is often used in prediction tasks and information retrieval. The main challenges with datasets of this size are prohibitive computational cost and limits on parallel processing. Traditional clustering algorithms have struggled…

Recent advances in econometric modeling and hypothesis testing mark a significant shift toward incorporating machine learning. Although progress has been made in estimating econometric models of human behaviour, much research remains to be done on generating these models more efficiently and testing them rigorously.

Academics from…

PyTorch recently launched the alpha version of ExecuTorch, a solution for deploying complex machine learning models on resource-limited edge devices such as smartphones and wearables. Limited computational power and other resource constraints have traditionally hindered deploying such models on edge devices. PyTorch's ExecuTorch Alpha aims to bridge this gap, optimizing model execution on…

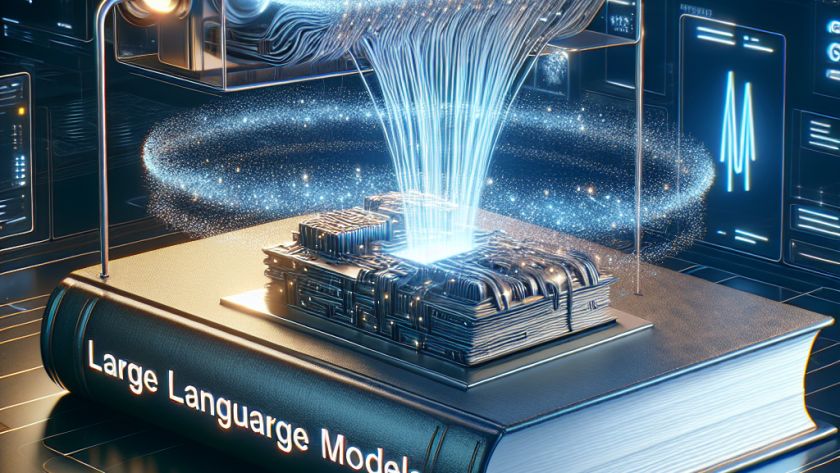

Large language models (LLMs) have significantly improved natural language understanding and are widely applied across many areas. However, they can be sensitive to the specific input prompt, which has motivated research into this behavior. That research has produced prompts for settings such as zero-shot and in-context learning. One such method, AutoPrompt, identifies task-specific tokens to…

Gene editing, a vital aspect of modern biotechnology, allows scientists to precisely manipulate genetic material, which has potential applications in fields such as medicine and agriculture. The complexity of gene editing creates challenges in its design and execution process, necessitating deep scientific knowledge and careful planning to avoid adverse consequences. Existing gene editing research has…

Large Language Models (LLMs) are used in various applications, but their high computational and memory demands lead to steep energy and financial costs when they are deployed on GPU servers. Research teams from FAIR, GenAI, and Reality Labs at Meta, the Universities of Toronto and Wisconsin-Madison, Carnegie Mellon University, and Dana-Farber Cancer Institute have been investigating the possibility…

Fine-tuning large language models (LLMs) is a crucial but often daunting task because it is resource- and time-intensive. Existing tools may lack the functionality needed to handle these workloads efficiently, particularly with respect to scalability and applying advanced optimization techniques across different hardware configurations.

In response, a new toolkit…

As parents, we try to select the perfect toys and learning tools by carefully balancing child safety with enjoyment, and we often turn to search engines to find the right pick. However, search engines often return generic results that aren't satisfactory.

Recognizing this, a team of researchers has devised an AI model named…