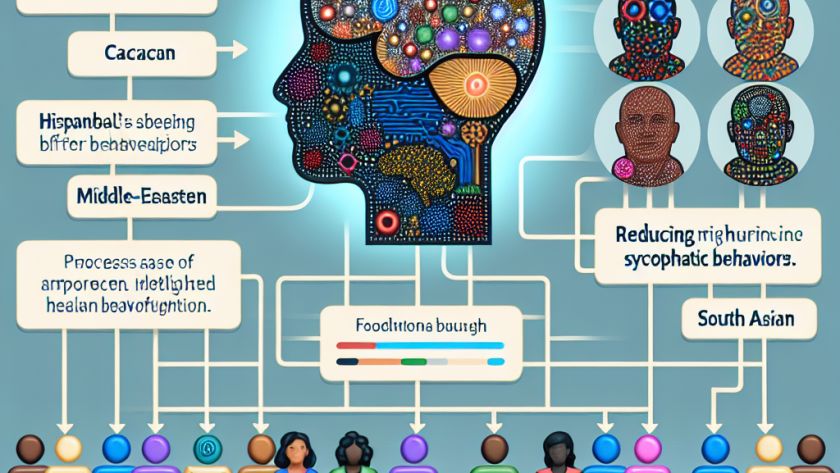

Large Language Models (LLMs) often exhibit judgment and decision-making patterns that resemble those of humans, making them attractive candidates for studying human cognition. They not only emulate rational norms such as risk and loss aversion, but also display human-like errors and biases, particularly in probability judgments and arithmetic operations. Despite this potential, challenges…