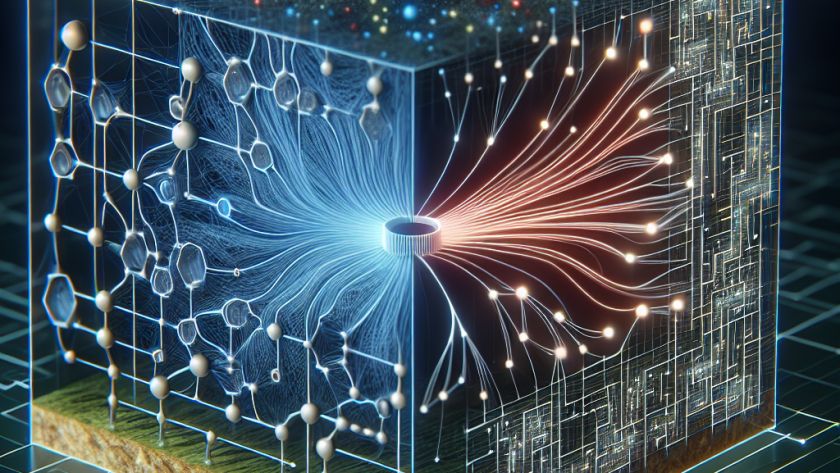

Digital pathology converts traditional glass slides into digital images for analysis, a shift accelerated by advances in imaging technology and software. This transition has important implications for medical diagnostics, research, and education. The ongoing AI revolution and the digital shift in biomedicine have the potential to accelerate improvements in precision health tenfold. Digital pathology can be…