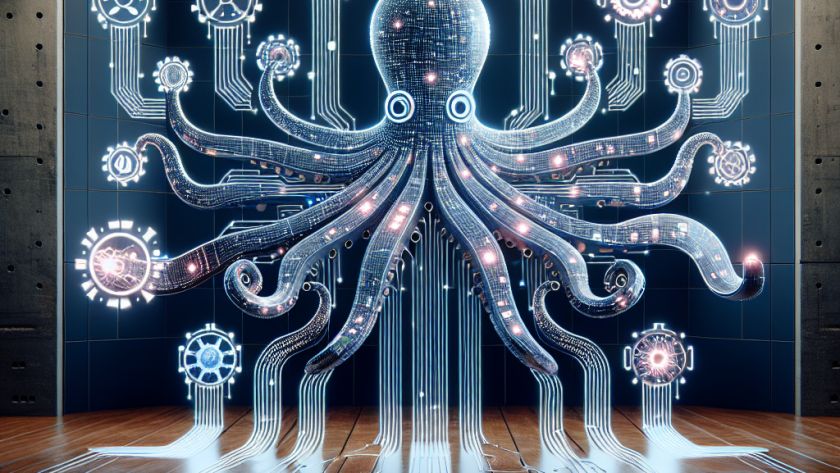

Multitask learning (MTL) trains a single model to perform several tasks simultaneously, using information shared across the tasks to improve performance. Despite these benefits, MTL poses challenges, such as managing large models and optimizing a single set of parameters across tasks.
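One common way to write the MTL objective is shown below. It assumes hard parameter sharing (shared parameters $\theta_{sh}$ plus task-specific parameters $\theta_t$) and fixed task weights $w_t$; the text does not specify either, so treat this as an illustrative formulation rather than the author's setup:

$$
\min_{\theta_{sh},\,\theta_1,\dots,\theta_T} \; \sum_{t=1}^{T} w_t\, \mathcal{L}_t(\theta_{sh}, \theta_t),
\qquad
\nabla_{\theta_{sh}} \sum_{t=1}^{T} w_t\, \mathcal{L}_t = \sum_{t=1}^{T} w_t\, \nabla_{\theta_{sh}} \mathcal{L}_t
$$

Because the shared parameters receive the sum of the per-task gradients, tasks whose gradients point in opposing directions can partly cancel each other, which is one reason optimizing across tasks is difficult.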

Current solutions to the under-optimization problem in MTL rely on gradient manipulation techniques, which can become computationally…
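To make "gradient manipulation" concrete, here is a minimal sketch of one well-known technique, PCGrad (projecting conflicting gradients), swapped in for illustration; the text does not say which method it has in mind. It assumes the per-task gradients of the shared parameters are already available as plain Python lists, and the function and helper names are hypothetical.

```python
import random
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def pcgrad(task_grads: List[Vector], seed: int = 0) -> Vector:
    """PCGrad-style combination of per-task gradients (illustrative sketch).

    For each task gradient g_i, visit the other tasks in random order and, if
    g_i conflicts with g_j (negative dot product), remove the component of
    g_i along the original g_j. The projected gradients are then summed into
    one update for the shared parameters.
    """
    rng = random.Random(seed)
    projected = []
    for i, g_i in enumerate(task_grads):
        g = list(g_i)  # copy so the caller's gradients are not modified
        others = [j for j in range(len(task_grads)) if j != i]
        rng.shuffle(others)  # PCGrad visits the other tasks in random order
        for j in others:
            g_j = task_grads[j]
            d = dot(g, g_j)
            if d < 0:  # conflict: project g onto the plane normal to g_j
                scale = d / dot(g_j, g_j)
                g = [x - scale * y for x, y in zip(g, g_j)]
        projected.append(g)
    # Sum the per-task projected gradients element-wise.
    return [sum(col) for col in zip(*projected)]


if __name__ == "__main__":
    # Two conflicting task gradients: plain summation would give [0.0, 1.0].
    print(pcgrad([[1.0, 0.0], [-1.0, 1.0]]))
```

With the two conflicting gradients in the usage example, each one is projected away from the other before summation, giving `[0.5, 1.5]`. Note that the method needs a separate gradient per task for the shared parameters and compares every pair of tasks, so the work grows with the number of tasks.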