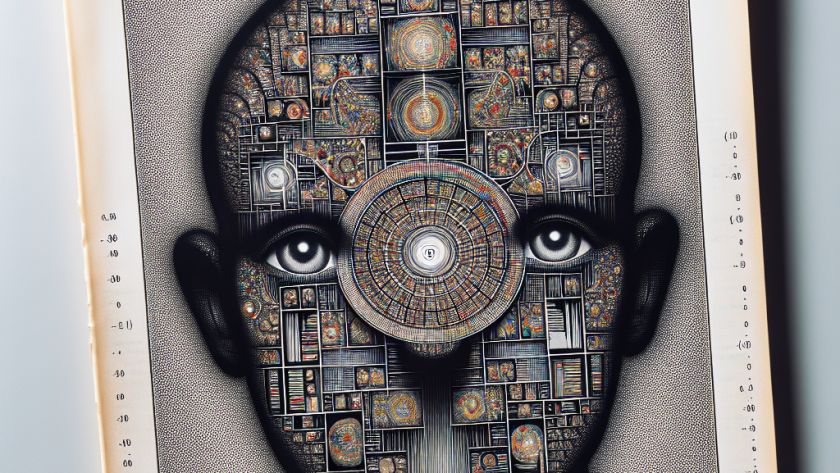

Advances in large language models (LLMs) have substantially improved natural language processing applications, delivering strong results in tasks such as translation, question answering, and text summarization. However, LLMs face a significant challenge: slow inference, which limits their usefulness in real-time applications. This problem stems mainly from memory bandwidth bottlenecks…