Researchers at the University of Texas at Austin and Rembrand have developed a new language model known as VOICECRAFT. The technology uses textless natural language processing (NLP), which aims to apply NLP tasks directly to spoken utterances, and marks a significant milestone in the field.

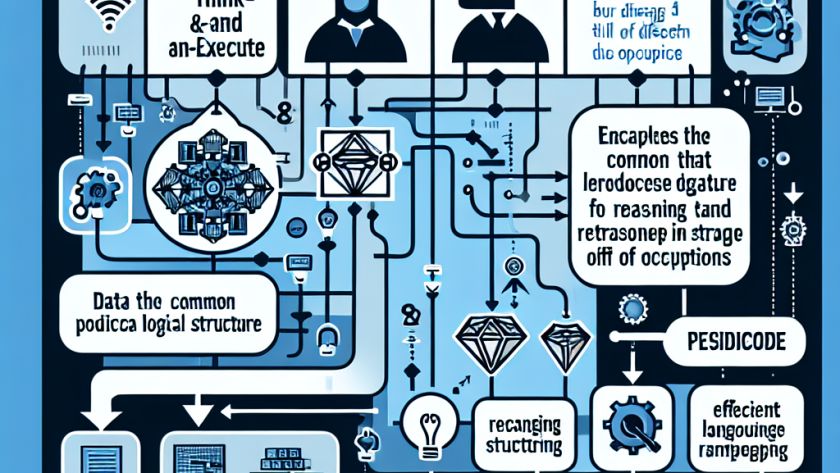

VOICECRAFT is a Transformer-based neural codec language model (NCLM)…