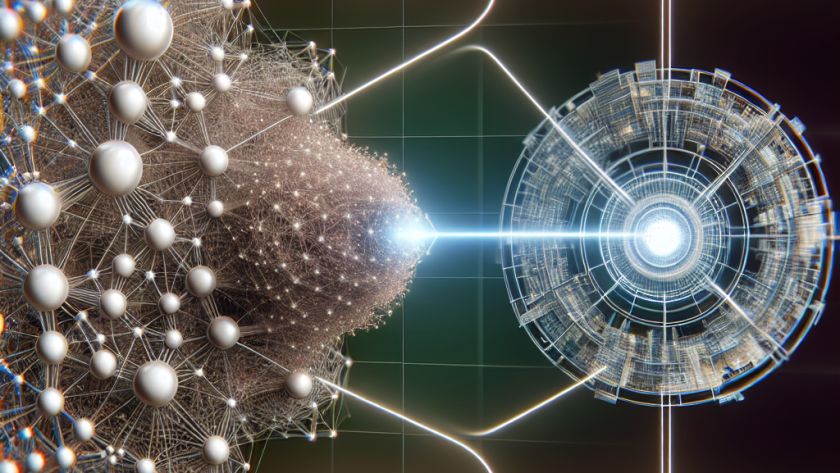

The field of software engineering has made significant strides with the development of Large Language Models (LLMs). These models are trained on comprehensive datasets, allowing them to efficiently perform a wide range of tasks, including code generation, translation, and optimization. LLMs are increasingly being employed for compiler optimization. However, traditional code optimization methods require…