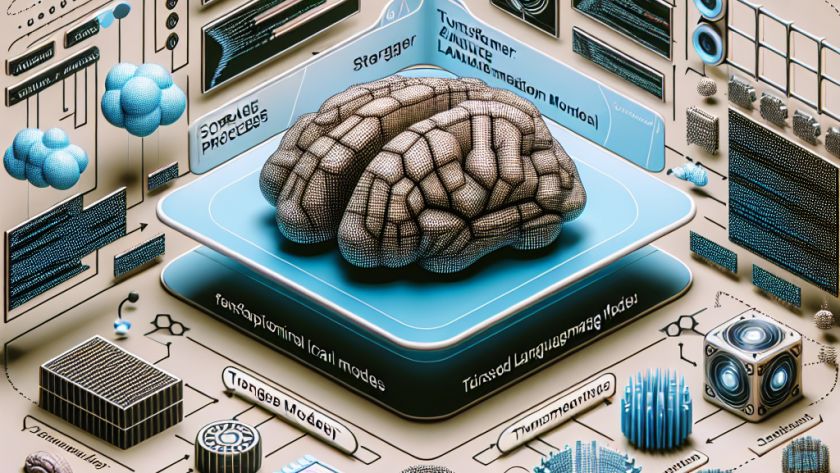

Bisheng is an open-source platform, released under the Apache 2.0 License, for accelerating the development of Large Language Model (LLM) applications. It is named after Bi Sheng, the inventor of movable type printing, reflecting its aim of advancing the spread of knowledge through intelligent applications. Bisheng is designed to accommodate both corporate users and technical…