Gene editing, a vital aspect of modern biotechnology, allows scientists to precisely manipulate genetic material, with potential applications in fields such as medicine and agriculture. Its complexity makes designing and executing experiments challenging, demanding deep scientific knowledge and careful planning to avoid adverse consequences. Existing gene editing research has…

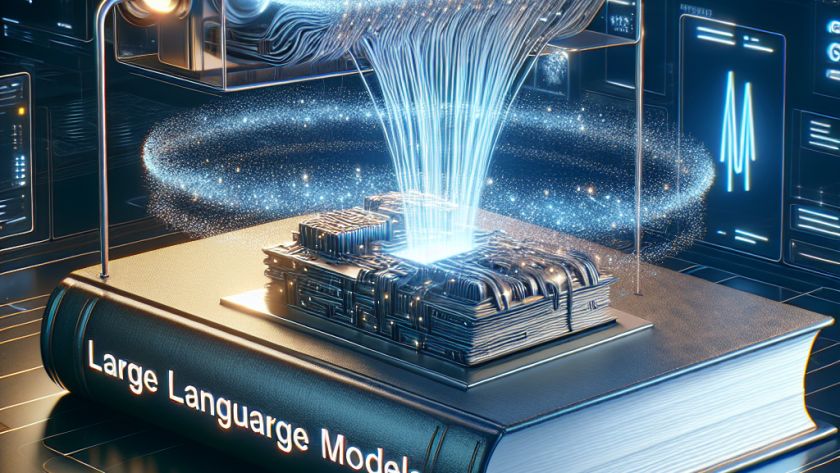

Large Language Models (LLMs) are used in various applications, but high computational and memory demands lead to steep energy and financial costs when deployed to GPU servers. Research teams from FAIR, GenAI, and Reality Labs at Meta, the Universities of Toronto and Wisconsin-Madison, Carnegie Mellon University, and Dana-Farber Cancer Institute have been investigating the possibility…

Fine-tuning large language models (LLMs) is a crucial but often daunting task because it is resource- and time-intensive. Existing tools may lack the functionality needed to handle such workloads efficiently, particularly with respect to scalability and the ability to apply advanced optimization techniques across different hardware configurations.

In response, a new toolkit…

As parents, we try to select the perfect toys and learning tools by carefully balancing child safety with enjoyment, and we often turn to search engines to find the right pick. However, search engines often return non-specific, unsatisfactory results.

Recognizing this, a team of researchers has devised an AI model named…

Advancements in large language models (LLMs) have greatly improved natural language processing applications, delivering exceptional results in tasks like translation, question answering, and text summarization. However, LLMs face a significant challenge: slow inference speed, which restricts their utility in real-time applications. This problem arises mainly from memory bandwidth bottlenecks…

In this digital age, data has become a critical asset for businesses, driving strategic decision-making. However, the need to collaborate on data with external partners has increased the risk of security breaches and privacy violations. Traditional data sharing methods entail the risk of sensitive data exposure, raising challenges to effectively manage and…

Researchers from MIT have been using a language-processing AI to study which types of sentences trigger activity in the brain's language-processing areas. They found that complex sentences that require decoding or contain unfamiliar words triggered higher responses in these areas than simple or nonsensical sentences. The AI was trained on 1,000 sentences from diverse sources,…

Task mining is a new tool that modern businesses are using to track employee performance efficiently and to improve productivity and profitability. The process automatically captures and analyses user interactions within different systems and identifies bottlenecks, inefficiencies, and areas for improvement.

Task mining captures user interactions with various software applications, providing insights into how tasks are accomplished. It…

Today's healthcare landscape is inundated with vast amounts of patient data due to the digitalization of healthcare. However, much of this data remains unstructured, which makes it difficult to extract valuable insights. This problem is discussed in detail in the segment of our whitepaper, The Clinical AI Scorecard: How Different Integration…

The rise of Artificial Intelligence (AI)-generated music on streaming platforms such as Spotify is causing concern, particularly among those working in the music industry. AI tools such as Udio, Suno, and Limewire allow the creation of natural-sounding music, which can then easily be uploaded and monetised. While music production has long been democratised, former…