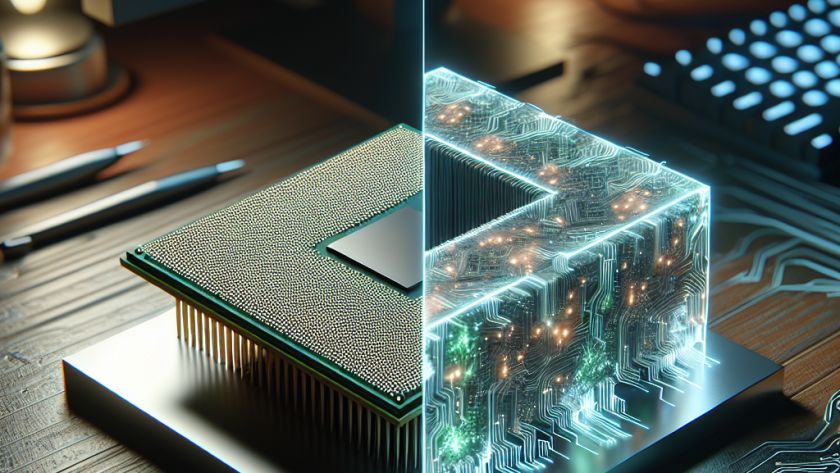

Hugging Face researchers have developed Quanto, a tool that streamlines the deployment of deep learning models on resource-constrained devices such as mobile phones and embedded systems. Quanto shrinks a model's computational and memory footprint by using low-precision data types, such as…
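To make the idea of low-precision data types concrete, here is a minimal sketch of symmetric 8-bit quantization in plain Python. It is not Quanto's API or implementation. The helper names `quantize_int8` and `dequantize` and the sample weights are hypothetical, chosen only to show how storing integer codes plus one scale factor reduces memory at the cost of a small rounding error.

```python
from array import array


def quantize_int8(values):
    """Map floats to int8 codes using a single symmetric scale (illustrative only)."""
    max_abs = max(abs(v) for v in values)
    # One scale for the whole tensor; fall back to 1.0 if all values are zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer code and clamp to the int8 range used here.
    codes = array("b", (max(-127, min(127, round(v / scale))) for v in values))
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float values from int8 codes."""
    return [c * scale for c in codes]


# Hypothetical 32-bit float weights standing in for a model tensor.
weights = array("f", [0.12, -0.5, 0.33, 0.9, -0.07, 0.0])

codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)

fp32_bytes = weights.itemsize * len(weights)  # 4 bytes per value
int8_bytes = codes.itemsize * len(codes)      # 1 byte per value
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(f"float32 storage: {fp32_bytes} bytes, int8 storage: {int8_bytes} bytes")
print(f"largest rounding error: {max_error:.6f} (scale = {scale:.6f})")
```

In this sketch the integer codes take a quarter of the space of the 32-bit floats, and each restored value differs from the original by at most about half the scale. Real quantization toolkits add refinements, such as per-channel scales and calibration, that are omitted here.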